Even though sound is at present still very much undervalued and underrepresented in the new media, and often treated as little more than a kind of optional extra, there is every chance that it will have a much increased role to play in the very near future. (van Leeuwen, 1999, p. 197)

Van Leeuwen’s (1999) observation is no small matter when teaching children about composing digital text. People hear sounds around them every day, and even outside the exact contexts, particular sounds can elicit thoughts, memories, feelings, and emotions (e.g., a siren signifies danger or an emergency). Today’s digital technologies offer students opportunities to communicate messages through multimodal approaches (Kress, 1998), as students use multiple modes such as sound and images in addition to words to represent meaning.

With current digital tools, music, voice-overs, or transitional audio can be readily inserted into digital compositions. For example, a slide in PowerPoint, HyperStudio, or other programs can be composed of a visual image and linguistic text, and audio may also be added so that the sounds play with a slide, across several slides, or between slides. With these new capabilities, digital technologies make different kinds of composing possible.

The increased use of digital technologies in the 1990s and the dominance of visual media led some educators and researchers to focus discussions primarily on communicating with visual and linguistic signs (Gall & Breeze, 2005; Kress & van Leeuwen, 1996). Studies focusing on communicating with visual and linguistic signs tend to acknowledge audio signs as a communicative mode, but still do not engage in analysis of audio signs (e.g., Jewitt, 2005). More recently, researchers have begun including the use of audio signs in their analysis of students’ multimodal texts (Hull & Nelson, 2005; Mahiri, 2006; Ranker, 2008, 2009), but studies focusing on the communicative possibilities of audio signs continue to be underrepresented in the literature.

This article stems from that recognition—that audio signs are theoretically considered one communicative mode (Cope & Kalantzis, 2000; Kress & van Leeuwen, 1996; New London Group [NLG], 1996)—but at the same time, exploration around communicative possibilities with sounds has been limited. Thus, the purpose of this article is to analyze students’ use and teachers’ scaffolding of audio signs (i.e., music, voice-over, narration, and transition sounds) when students are composing a multimodal text in a school context.

At present, elementary educators have extensive pedagogical content knowledge in using words or linguistic modes of communication, because within a school context linguistic signs have historically been privileged over other modes (Bezemer & Kress, 2008; Jewitt & Kress, 2003; Lankshear & Knobel, 2007; NLG, 1996). Escalated by the requirements of the No Child Left Behind Act, this historical focus on the printed word within schools has been reinforced by the continued push of high-stakes testing, which draws almost exclusively on students’ use and knowledge of linguistic text. In addition, the professional development models needed to support teachers’ pedagogical practices around multimodal composing—practices that enable children to compose texts using a variety of sign systems (e.g., print, image, or sound)—are still in the process of being developed.

Many professional development opportunities offered to practicing teachers around the use of digital technologies in elementary classrooms maintain a limited focus on how to use technology or various software programs, neglecting the more diverse communicative possibilities afforded to writers of texts composed through digital technologies (Miller & Borowicz, 2006). Thus, teacher instruction often remains primarily focused on the printed word or the operational use of software programs, resulting in “an ‘under realization’ of the potential of new technologies” (Knobel & Lankshear, 2007, p. 77).

Because the communicative power of digital tools is defined by the way classroom teachers use them (Myers & Beach, 2001), discussion around digital technologies in professional development opportunities should go beyond ways to use software and encompass ways to communicate a message effectively through multiple signs. Therefore, a dialog must begin within professional development opportunities so that educators can explore the communicative potentials of various signs available to students when composing digitally. The move to constructing text with digital technologies signifies substantive change for pedagogy and curriculum for elementary educators. Among the content knowledge and skills teachers and students need to develop is how to communicate effectively with various modes or signs beyond print.

The purpose of this paper is to explore, through a close analysis of one class project, students’ use of and the teacher’s scaffolding of audio signs. Two research questions guided this study:

- In what ways did the fifth-grade students use audio signs, specifically transition sounds, when constructing multimodal texts with different sign systems?

- In what ways did the classroom teacher shape the specific social-cultural environment for audio sign use?

The findings of this study argue for professional development opportunities for teachers where they not only learn how to use various software programs but also learn the content knowledge necessary for how to communicate with multiple signs such as audio.

Social Semiotics and Multimodality

This study, focused on the use of audio signs to communicate and represent meaning, is framed within theories of Social Semiotics, Multimodality, and the New London Group’s Pedagogy of Multiliteracies. In the next three sections the following issues are discussed: (a) sign use as influenced by social practice, (b) what authors of multimodal texts consider when representing meaning through signs, and (c) theories of multiliteracies, more specifically the Designs of Meaning framework.

Shaping Sign Use Through Social Practice

The theory of social semiotics (Halliday, 1978; Hodge & Kress, 1988) offers a perspective for recognizing students’ use of multiple sign systems when composing using digital technologies and is considered the foundational theory supporting multimodality (Jewitt & Kress, 2003; Kress & van Leeuwen, 2001). From the perspective of social semiotics, the author not only selects specific signs (i.e., words, visuals, or sounds) to convey a message, but also understands the “communicational environment” within which the signs are used.

The signs that people use are created within their cultures. For example, within a western culture the shape of a heart drawn on a piece of paper or cut out of construction paper and the color pink or red indicate love. Each mode of communication is shaped by the social lives of a particular culture and, thus, understood by the members of that community. When the impact of the social cultural environment on the authors’ choice of signs is acknowledged, the ways in which teachers shape sign use within the classroom context must also be considered. In other words, students in the fifth-grade context of this study would use signs in ways they perceive are appropriate within their particular environment. Thus, inherent to a sign’s affordance is the cultural and social history of that sign.

Sign Use by Composers of Digital Texts

Ultimately, there are two concerns when authors compose multimodally: what the author wants to represent and the author’s perceptions of what the audience wants when reading (Jewitt & Kress, 2003). (Clearly an author’s identity also plays a role in composition, just as it plays a role in the cultural interpretation of signs; e.g., see McVee, Bailey, & Shanahan, 2008.) Authors might ask which signs are effective for representing this information or which signs will engage the readers. As in conventional writing, both representing meaning and considering what the audience wants to hear come into play when composing digitally.

The convenience of newer digital technologies provides potential sign-makers or authors with readily accessible visual signs, such as images and animations, as well as audio signs such as music and sound effects. Using transitions and sounds and inserting images or animations is less complicated than it was prior to the advent of digital technologies. Within this study, social semiotics theory is used to analyze both the context the teacher sets up for audio sign use and the audio signs the students selected to represent their understandings of acid rain’s impact on the environment.

Multiliteracies: Designs of Meaning

According to the NLG, the “Designs of Meaning” framework separates designs into three elements: “Available Design, Designing, and the Redesign” (NLG, 1996, p. 74). The NLG posited that, while students are in the act of designing, students and teachers draw from a variety of Available Designs (i.e., social conventions and grammars of different semiotic systems) to transform the meaning of a previous text. The six communicative modes or communicative signs are linguistic, visual, audio, gestural, spatial, and multimodal. This theory claims that composers of new genres draw on familiar sociolinguistic practices and grammars of various semiotic sign systems. Semiotic activity is seen “as a creative application and combination of conventions (resources of available designs) that, in the process of Design, transforms at the same time it reproduces these conventions” (NLG, 1996, p. 74). Furthermore, the NLG suggested that when composers design and redesign any new text with resources of available design they attend to “orders of discourse” (Fairclough, 1995), which entails the “generative interrelation of discourses in a social context” (NLG, 1996, p. 13). Applying the principle of design to this study means that composers of texts (in this case, multimodal texts) draw from already established genres and conventions when designing (i.e., work done with Available Designs).

Teacher Development

Though access to these digital technologies has increased over the past 10 to 15 years (Snyder, Tan, & Hoffman, 2006), professional development focused on meaningful multimodal design, with explicit attention to design leading to conscious layering of representational modes to create maximum meaning, is rare (Miller, 2008). Instead, many professional development workshops available to in-service teachers are decontextualized “stand-alone workshops” (p. 446) that result in little transfer into the classroom. They often maintain a limited focus on how to use technology or software programs (Miller & Borowicz, 2006).

These types of professional development practices neglect the more diverse communicative possibilities afforded to writers of multimodal texts. Research indicates that teachers tend to underuse the communicative potential of various modes when composing multimodally because of their unconscious print bias and, therefore, that explicit attention to the orchestration of multiple modes is a solution to this issue (e.g., Bailey, 2006; Miller, 2008; Miller & McVee, 2012; Shanahan, 2006).

The current changes in digital technologies coupled with teachers’ apprenticeships with print-based literacies require that literacy researchers and teacher educators further examine ways to support teachers in recognizing the need to change their practices regarding the orchestration of multiple modes (McVee, Bailey, & Shanahan, 2012). According to Miller and Borowicz (2006), expanding the notion of literacy to include multimodal meaning-making systems beyond printed text for all students is a critical task for schools in the 21st century.

Several scholars have proposed theoretical models of teaching multiliteracies (e.g., NLG, 1996; Healy, 2008; Kalantzis & Cope, 2005; Miller & McVee, 2012; Unsworth, 2001; Zammit, 2010) and models of professional development (Bearne & Wolstencroft, 2007; Leander, 2009; Miller & Borowicz, 2006). For instance, Leander (2009) advanced a solution he called “parallel pedagogy” (p. 148), in which composition is taught by exploring the relationship between new literacy practices and more conventional print-based practices. These practices afford teachers the opportunity to show how “new media has dimensions of old media within” (p. 163), and how particular semiotic resources have certain affordances and limitations with different media. Leander’s parallel pedagogy provides a bridge between new and old media, which would be advantageous in a school context. Miller and Borowicz (2006) referred to an instructional focus for visual design that might include the teaching of page layouts, screen formats, spatial positioning, the use of color or black and white, and gradations of color. Although numerous calls for change have occurred, the shift in focus remains a challenge for teachers.

Designing With Sound

Studies focusing on the communicative possibilities of audio signs continue to be underrepresented in the education literature. Guidance was found instead in the literature on sound design in filmmaking, such as documentaries and fictional films, as well as literature related to the design of software for educational purposes, video gaming, and websites. All of these contexts for sound design shared similar perspectives on how sound is viewed within their fields. Filmmakers and video-game designers typically add sound in the postproduction phase of development. According to Sider (2003), “sound in film remains, as it has for decades, a more or less technical exercise tacked on to the end of post-production” (p. 5), and only 3% of Hollywood film budgets are allocated for the incorporation of sounds. Bridgett (2010) echoed this perspective, claiming that numerous designers do not consider sound in their design or consider sounds only at the last minute. This tactic results in poorly thought-out sound effects that detract from the visual aspects. Bridgett (2010) and Sider (2003) both argued for a more synergistic perspective of sound and image, where sound is more integrated and functions as more than decoration, add-on, or embellishment for a picture, website, or video game. In addition, both authors called for a more holistic view of composing, in which sound integration is considered throughout the design process from preproduction through postproduction.

Bishop, Amankwatia, and Cates (2008) presented a similar perspective toward sound in the development of educational software. Their research indicated that the guidelines for instructional design provided to designers of educational software regarding sound are not well developed. Thus, in contexts where designers have the capability to integrate multiple modes of communication, sound is often thought of after the fact and is considered ancillary to image and words. These authors argued that new technologies make incorporating sound in learning environments easier, so instructional designers should “exploit the associative potential of music, sound effects, and narration to help learners process material under study more deeply” (p. 482).

Function of Sound

Sounds have multiple communicative functions. They have the ability to convey both literal and nonliteral information. Literal sounds convey meaning that refers the listener to the sound-producing source (Bridgett, 2010). For example, hearing footsteps refers the listener to a person walking or running. When viewers see the source of the sound, like a character speaking, or are referred to the source of the sound, like footsteps, the literal sound is considered source-connected or diegetic sound. Conversely, nonliteral sound is source-disconnected or nondiegetic sound, meaning the viewer cannot see and is not referred to the source that created the sound. A voiceover or narration is an example of source-disconnected sound, because the viewer cannot see the person speaking. Sound effects and music, which are added to evoke images, abstractions, and emotions, are also considered nonliteral sounds.

Not only can sounds convey information, sounds also serve aesthetic purposes by making the environment emotionally arousing (Bishop et al., 2008) as well as creating mood and conveying emotion (Underwood, 2008). Sound can function as a tool to gain, focus, direct, and maintain viewers’ attention and interest over time (Murray, 2010) leading to a higher level of engagement with the multimedia or film. Further, sound can assist viewers in seeing the interconnectedness among pieces of information (Harmon, 1988; Perkins, 1983; Winn, 1993; Yost, 1993) and convey information like the setting and the mood (Bigand, 1993).

Sound Use in a Documentary

This paper refers to the conventions of sound that are specific to the way sound is used in documentaries, partly because of the lack of information available in the educational literature on sound use and partly because documentaries and the acid rain compositions by the students in this study are both nonfiction genres. In a nonfiction genre viewers perceive what they are seeing as real. Documentaries typically include a narrator or voiceover with an authoritative tone, which persuades the audience that the events being viewed are real and authentic (Altman, 1992). The use of voiceovers can affect perceived reality (Murray, 2010). In addition, environmental or natural sound is typically used to aid authenticity. Nonliteral or nondiegetic sounds like music are used to bridge meaning across scenes, manipulate emotional responses from the audience, and portray reality (Altman, 1992).

Synergy of Sound and Image

Although sound has the potential to accomplish these semiotic tasks, sound designers argue that the potentials of sound are not being maximized, and they desire a more holistic view of sound and image than the one currently held (Bridgett, 2010; Chion, 1990; Murray, 2010). They espouse a perspective in which sound is not viewed as ancillary and discussed primarily in the postproduction phase. Chion (1990) captured these sentiments:

The most successful sounds seem not only to alter what the audience sees, but to go further and trigger a kind of ‘conceptual resonance’ between image and sound: the sound makes us see the image differently, and then this new image makes us hear the sound differently, which in turn makes us see something else in the image, which makes us hear different things in the sound, and so on. (p. xxii)

Sound thus affords the potential to be not merely additive to the meanings people construct but multiplicative, in that each sound offers the potential to see images, movements, or other modes in a new way.

An Invitation to Learn: Looking into Mrs. Bowie’s Fifth-Grade Classroom

This interpretive case study (Merriam, 2001) stems from a larger study and was undertaken to develop a deeper understanding of the challenges teachers face with technology integration. The research site for this study was in a small suburban district in a Northeastern city in the United States that provided ongoing professional development and computer access for teachers. The district served 3,864 students; 493 students attended Landers Elementary School. Students were predominantly White, and less than 10% qualified for public assistance. Nearly 83% of students scored at or above the proficient level on the fourth-grade state English/language arts exam. The participants were the classroom teacher, Mrs. Bowie, and six focal students.

Mrs. Bowie was a veteran teacher of 13 years. Her use of technology was largely procedural and technical (as defined by Miller, 2008), with very little consideration of the communicative potentials available through digital technology. She integrated the use of “new technology into traditional classroom practice” (as defined by Apple Classrooms of Tomorrow, 1995, p. 16), for example, by using graphic tools, spreadsheets, and word processors.

Mrs. Bowie’s role in the classroom demonstrated her beliefs in a learner-centered environment. She modeled and scaffolded student learning and posed problems for students to solve collaboratively. Mrs. Bowie knew that, when it came to technology, her students were in many cases more savvy than she was. For that reason, the students acted as both collaborators and experts. Mrs. Bowie embraced what Gee (2003) described as the shift in stance from teachers as dispensers of knowledge to collaborative problem-solvers.

Mrs. Bowie also understood the pedagogical value of modeling. She began the project by modeling her expectations in the library, which she chose because of Internet access and a computer connected to a projection unit. When she was not modeling, she moved between groups that were designing at worktables and producing at the computers.

Mrs. Bowie integrated the use of digital technologies within an ecosystem unit. The following is her introduction of the acid rain project to her fifth-grade students, who worked in research teams:

Why are the trees dying? How come there are no fish in the lake? Why does the paint on my Dad’s car look so bad? Where does that terrible rotten egg smell come from in our school yard every spring? The answer to these questions is simple; acid rain is responsible for many of the serious environmental problems facing us today. While the answer may be simple, solving the acid rain problem is not. Your task: A local citizens group has hired you, and a group of other researchers to investigate acid rain. You will take on the role of a chemist, biologist or economist and examine the issue from that perspective. Teams of six, two chemists, two biologists, and two economists will work together to create a HyperStudio presentation detailing the problems caused by acid rain and make up a series of recommendations on how to combat this serious issue.

For this project, Mrs. Bowie placed students into four teams of approximately six students. She allowed the students to choose their partners within the teams. Jeremy and Danielle partnered to study acid rain from an ecologist’s perspective. Danielle navigated print-based text at a higher level (seventh-grade level) than Jeremy (fifth-grade level). Krystal and April studied acid rain from the chemist’s perspective; both read at the fifth-grade level. The last two partners, John and Abigail, collaborated on the acid rain project from a biologist’s perspective. They read at the seventh-grade level. I purposely selected students reading at or above grade level, because I did not want students’ lack of reading achievement to be a potential reason why the integration of digital technologies into the curriculum was altered.

HyperStudio: The Software

Mrs. Bowie said she purposely selected the HyperStudio software over PowerPoint because in HyperStudio the authors determined the reading path for the readers by inserting links or what HyperStudio calls “buttons,” to link one slide to another. Although PowerPoint also has a hyperlink feature, Mrs. Bowie felt that the students’ knowledge of and experience with PowerPoint led them to perceive and use PowerPoint in more linear ways. Her goal was to move away from the linear reading path of PowerPoint and move students into designing a more open reading path.

HyperStudio is a hypermedia authoring system that affords authors opportunities to incorporate text, sound clips, graphics, animations, video, and internal and external links. HyperStudio also requires authors to create hyperlinks between slides in the stack. Individual slides are like webpages, and each stack is made up of slides. Students connect the slides through what the software program calls buttons. The purpose of a button on a HyperStudio slide is to control some kind of action. The most common action is to take the reader to another slide. A button can control many other actions as well; playing a sound and showing or hiding an object are also common button actions.

Our Learning Tools: The Data

The design of this study was an interpretive case study (Merriam, 1998). Data analysis occurred recursively as data were collected, inductively analyzed, and reanalyzed. In conjunction with analytic induction, I also used the constant-comparative method, which is compatible with inductive types of data analysis.

Data were collected over a 6-week instructional unit during each 45-minute science block in May and June. Students worked on this project 4 days a week, totaling 1,080 minutes. Primary data sources included written field notes of classroom observations, transcriptions of audio-taped data, copies of the completed HyperStudio project, two interviews (i.e., pre and post), and the Developmental Reading Assessment® results indicating the focal students’ reading levels. The teacher and students wore wireless microphones throughout the project to capture the discourse around sign use. Recording the interactions between the teacher and students and among the students allowed for a close examination of the students’ sign use when digitally composing multimodal texts. Furthermore, the discourse provided data addressing how Mrs. Bowie shaped sign use.

Initial coding of data included categorizing conversations around sign use from the transcripts into four categories: (a) conversations around visual signs, (b) conversations around linguistic signs, (c) conversations around audio signs, and (d) conversations around spatial design. Next, within each category I sorted the conversations into three additional categories: teachers’ direct instruction of the sign, teacher/student conversations of sign use, and student conversations of sign use. Then I sorted the conversations by the content of the discussion as being either the operational use of the software or representing meaning through sign use. Through this part of the analysis I was able to answer the research question about the ways in which the classroom teacher shaped the specific social cultural environment for audio sign use.

To understand how students used audio signs within their multimodal texts, I used their final HyperStudio products and created a sound script (van Leeuwen, 1999). According to van Leeuwen, the purpose of the sound script is to “itemize every individual component of the soundtrack” (p. 201). Because I was analyzing the use of sounds within a HyperStudio composition, I was also interested in the content of each slide that surrounded the transition sounds. Hence, I adapted van Leeuwen’s model of a sound script and included content information about acid rain, including visual representations of content that came before and after each transition sound used, with the goal of contextualizing the sound choice.

Next, I revisited the transcripts to align the final audio sounds with the conversations that were taking place around the choices of audio signs. Criteria used to determine if the students selected an audio sign that contributed to the message or the mood were based in part on the conversations they engaged in while selecting their sounds. The other determining factor was the use of the sign itself. If their conversation dealt with the use of sound to convey a concept to the reader or to convey the idea that acid rain is negative, then the audio sign was categorized as Information/Mood. Sign selections that did not fit this category were labeled as Entertainment. For the Entertainment code the students considered only the reaction from their peers and not the message or mood of the composition in their conversations and sound selection.

Through these various codes I examined teacher scaffolding, student decision making, and the content of the scaffolds. These data illustrated how both “culture and context” (Pea, 1993) played key roles in audio sign use. In order to establish trustworthiness, member checks were conducted by sharing the data analyses, interpretations, and conclusions with Mrs. Bowie as a way to add to the credibility of both my interpretations and findings (as recommended by Merriam, 1998).

Mrs. Bowie’s Scaffolding: Viewing the Pedagogical Landscape

Mrs. Bowie was one of several teachers in the school district who piloted the use of technology in the classroom. In that role she also had the chance to engage in many professional development sessions over a 2-year period. Mrs. Bowie said that the school district offered professional development opportunities focusing on how to use different software programs, such as Microsoft Word, PowerPoint, Excel, and HyperStudio. Accordingly, her instructional focus mirrored the professional development she had engaged in through the school district, which was the use of software programs. The focus on the operational use of software was evident in the goal statement Mrs. Bowie shared with the students about the HyperStudio compositions they were about to write.

My mission this time, besides learning about acid rain, was finally taking a fifth grader who knows HyperStudio and giving them a chance to learn a bit more and expand on it, adding the animation in there and adding more graphics. You’ll see more detail in there.

Consistent with her professional development opportunities, Mrs. Bowie approached the HyperStudio multimodal composition process from the perspective of how to use tools in the software program instead of from a communicative perspective. What was missing from her mission statement and from the learning opportunities offered to her students, as well as to herself, was a discussion of how to use words, images, animations, and sounds effectively to convey a message about acid rain. In introducing HyperStudio, Mrs. Bowie spent most of the time showing students how to add or insert visual elements and how to work the program.

The focus on how to use the software was repeated several times throughout the project. For example, when talking with the class Mrs. Bowie said, “I want you to be using as many tools as possible. So I’d like to see drawing tools, I’d like to see text tools. You might end up using a scroll bar.” Once more her focus was on the use of the digital tools that created words and images, not sounds. Additionally, the focus was on operational use of digital tools, not communication with signs produced by digital tools. Further confirmation of the instructional focus was also apparent in the transcripts of classroom conversations when students were composing their HyperStudio multimodal text.

Instructionally, when Mrs. Bowie introduced the unit throughout the first week, she explicitly taught students how to insert images, draw images, and insert animations, but did not address the use of sound. Out of the 111 conversations around visual, linguistic, and audio signs, she initiated no conversations around the use of audio signs. In the three student-initiated conversations she engaged in about audio signs, her comments primarily focused on how to use transition sounds with the buttons in the HyperStudio program. For example, on the 5th day of the acid rain unit Mrs. Bowie responded to a student’s question about recording their voices into the HyperStudio text in the following discussion:

| April: | Now, how do we get it to talk if we want it to talk? |

| Mrs. Bowie: | There is actually, it’s a button as well. And if you go to buttons and it says not animation, what does it say? |

| Krystal: | Voice, play sound. |

| Danielle: | Play sound. |

| Mrs. Bowie: | Play sound, and you can actually record your information. |

April’s question led into a brief discussion in which Mrs. Bowie, Danielle, and Krystal attempted to recall how to record sound into the HyperStudio texts. Notice that there was no modeling or scaffolding of how to incorporate sound as there was for the insertion of an image or animation. Although this conversation was an important one regarding tool use, there were two specific limitations. One was the lack of explicit instruction on how to incorporate audio signs. The other limitation dealt with the communicative nature of audio signs. Specifically, Mrs. Bowie did not offer April guidance in how to represent meaning with sound.

Had Mrs. Bowie been aware of or drawn from the use of sound in filmmaking, more specifically the genre of documentary, she could have demonstrated how sound can function to communicate a more compelling and credible message. For example, in a documentary an authoritative voice-over or narration adds credibility to the perspective that acid rain has a negative impact on the environment. The use of an authoritative voice-over or a narration could encourage readers to see their message as reality and truthful (Altman, 1992). Furthermore, Mrs. Bowie could have expanded students’ knowledge of how visual and audio signs together create meaning. Unfortunately, when a teachable moment about the function of voice-over in a nonfiction genre presented itself, Mrs. Bowie, whether intentionally or unintentionally, let it slip by, not explicitly teaching the technical skill of sound integration or the communicative functions of sounds. Consequently, in my analysis of the HyperStudio artifacts, I noticed that not one student used the recording device in the software program.

A day later the focal students initiated a discussion with Mrs. Bowie about the use of transition sounds, which were literal and nonliteral sound effects (Altman, 1992), during the drafting process right after the group had come to the consensus that acid rain had a negative impact on the environment.

| Speaker | Utterance |
| --- | --- |
| Abigail: | Yeah, like acid rain. Dunnuh-uh [suspenseful sound mimicking the theme from the movie Jaws]. |
| Mrs. Bowie: | Well you know what… |
| John: | You can do sound effect. |
| Mrs. Bowie: | Yeah, you can put sound effects in there. |
| Abigail: | Acid rain. |
| Group: | Dunnuh. |
| Mrs. Bowie: | ‘Cause maybe what that could be is the opening button to open up the stack. |
| Abigail: | Yeah, acid rain, Dunnuh-uh. Like in big words it should say, “Acid Rain, Dunnuh—It’s Bad!” |

While discussing the use of buttons the students engaged in a conversation on representing the message that acid rain was bad with both words and an audio sign, making the “dunnuh” sound. “Dunnuh” is a nonliteral sound and puts a sensory punctuation mark on the fact that acid rain had a negative effect on the environment. The students attempted to create suspense with music just as Brown, Zanuck, and Spielberg (1975) did in the movie Jaws, where the suspenseful music and visual elements of the boat on the calm water elicited suspense and fear from the viewers.

Visual and audio signs used together are what communicated and created such a powerful message in Jaws, similar to what Abigail was attempting to do. Notice that after John stated, “You can do sound effect,” Mrs. Bowie chimed in and suggested the same as John without expanding instructionally to raise students’ awareness about the communicative value of sound.

Mrs. Bowie’s omission resulted in another missed opportunity to further students’ understanding about where connections with sound can be used to create meaning and influence mood. Equipped with different content knowledge Mrs. Bowie could have shared with the students that transition sounds can effectively create a mood (Bigand, 1993) that could further engage the reader and at the same time evoke the idea that acid rain has a negative impact on the environment. Mrs. Bowie and the students must know that these nonliteral sound effects can gain, focus, direct, and maintain the viewers’ attention over time (e.g., Murray, 2010) if they want to use audio signs strategically. Instructionally, up to this point Mrs. Bowie referred only to the use of images to engage the reader and the linguistic signs to inform the reader. She never discussed the functions of sound.

When considering the pedagogical landscape I analyzed both the finished HyperStudio compositions and the conversations in the classroom. In the focal group’s HyperStudio multimodal composition there were 31 transition sounds used between slides. Interestingly, of the nine total conversations about the use of sound, Mrs. Bowie made one explicit statement about the use of audio signs on the last day of the unit, students initiated three conversations with Mrs. Bowie, and students initiated five conversations about audio signs among themselves.

The Authors: Students’ Use of Audio Signs

The lack of discussion around sound within the classroom context resulted in students not knowing the expectations of sound use. For instance, was sound used for entertainment purposes to make the readers laugh? Or was the purpose to communicate a message while engaging the readers? The lack of explicitness in the classroom environment was evident in students’ final products. Out of 31 transition sounds used in the acid rain multimodal texts, seven links used transition sounds that did not further the message about acid rain or the mood aligning with its negative impact. Figure 1 and Figure 2 represent instances where students heard a sound when listening to the sound library, laughed, and considered only the entertainment factor when they shared their HyperStudio compositions with their classmates.

The chemistry partners used a powerful and intimidating lion roar as a transition sound between the two slides in Figure 1. See also Video 1.

Figure 1. Chemistry partners’ audio sign use.

Video 1. Chemistry partners’ missed opportunity.

The lion roar did not relate to the content of either slide. The first slide provided information that the pH level of normal rain is 5.5, and acid rain pH levels can be as low as 1, making the rain harmful to the environment. After reading the first slide, the reader clicked on the back button and heard a lion roar. The roar led the reader back to the chemistry home slide. From the transcripts it was evident that the students selected the lion roar because it made them laugh. As Krystal clicked on the lion roar in the sound library she said, “[laughing] That’s it. Pick that one! Pick that one! It’s funny!” Her partner inserted the lion roar transition sound. In this brief interaction, while choosing a sound for transition, there was no discussion about acid rain nor of its harmful effects. In addition, there was no discussion on how to use sound to bridge their message across slides. Consequently, the sound did not add credibility to the nonfiction text.

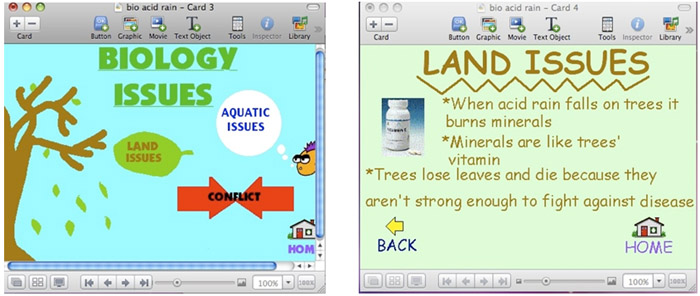

Figure 2 and Video 2 represent another example where two other students (the biologists) inserted a sound that did not relate to the content of either slide.

Figure 2. Biology partners’ audio sign use.

Video 2. Biology partners’ missed opportunity.

The first slide is the biology home slide, which outlines all the biology issues with acid rain that the reader could learn about, such as land and aquatic issues or the conflict between acid rain and the environment. When readers clicked on the “Land Issues” leaf (i.e., button), readers would hear the trumpeting sound of an elephant; this led readers to the slide that discussed Land Issues. Interestingly, the information on the slide explained how acid rain impacted the minerals trees need to survive.

Taken together, these examples suggest how important it is for the classroom teacher to foster an environment where students understand that digital tools afford writers the opportunity to communicate their message with different signs, such as sounds and images. When using sound, either literal or nonliteral, in documentaries the filmmaker must realize that the audience will expect all events to be real (Altman, 1992). The insertion of a lion and elephant sound in a text about acid rain’s impact on the environment raises a question of credibility. Further, sounds between slides can be used to bridge meaning between slides (Altman, 1992). The sounds used in those examples did not contribute by creating a unified message across slides. The questions both Mrs. Bowie and the students could ask themselves are:

- What meanings are made when I add this sound?

- What effects does using this transition sound have on visual and linguistic signs?

- How does the use of sounds undermine or support the message about acid rain?

- In what ways, if any, does the use of this sound add to the credibility of the composition?

Asking these types of questions about sound use is important, because the students’ multimodal compositions are intended to present factual information about the world. If students use sound that undermines their message then their credibility and leverage to change opinions will be lost.

Mrs. Bowie could have moved one step further and discussed audio and visual signs together. Taking a more synergistic perspective of sound and image, as suggested by those who study sound design (e.g., Bridgett, 2010; Chion, 1990; Murray, 2010), students could ask:

- How does the use of this sound influence the meaning of the image?

- How does the use of this image influence how we hear the sound?

Thus, questions such as these teach students to examine how sound influences image and image influences sound, questions that are essential in multimodal composition. Then writers can determine if the message being portrayed is the one they intended.

The encouraging news is that these two examples represent 7 of 31 transition sounds; thus, only about 23% of the sounds the students used did not contribute to the message about acid rain. Students selected audio signs that contributed to the message 24 of 31 times, or about 77% of the time, suggesting that they are capable of considering communicative possibilities. For example, two students linked the Directions Page to the Danger Signs page with the sound of a child yelling. The sound of a child yelling can signify or provide warning to look for danger signs of acid rain. The use of a literal sound across the two slides paralleled the authors’ message.

Of the 24 audio signs used, students predominantly used sound effects to create a sense of suspense by using a nonliteral sound, for instance, a spiraling sound that increased with intensity as the next slide came into view. Ultimately, further conversation from Mrs. Bowie explicating how to layer meaning across various signs and engaging in conversations on how these signs are used with a nonfiction film genre like a documentary may have resulted in students having a better understanding of the use of sound to convey meaning with other signs.

While composing their HyperStudio multimodal text, one group of students discussed the use of transition sounds for communicative purposes. Unfortunately, this happened only once. During the drafting process, Abigail stated that her group should have consistency with their transition sounds from one stack of slides to another. The following conversation demonstrates the students’ ability to connect the transition sounds to the overall message of the composition and to use sounds in a way that added coherence to the composition. In the excerpt below, Abigail and John were working on the auditory transitions. They discussed two transitions called “rain” and “dissolve”:

| Speaker | Utterance |
| --- | --- |
| Abigail: | Instead of doing all different transitions, those things, when we transition, why don’t we try to do a lot of rain ones? Press a button and… |
| John: | Dissolve [name of transition sound] |
| Abigail: | No, Rain. It’s called Rain. There is one called Rain. Try and use Rain. |
| John: | Why don’t we use a lot of different ones so it doesn’t get boring? |
| Krystal: | Yeah… |

In this brief excerpt, the group debated whether they wanted to have different transitions for each slide or the same transitions for all of the slides. Abigail selected the Rain transition sound and John selected the Dissolve transition sound. Through this dialog, it was evident that the students attempted to associate the transition sound with the content of acid rain, as both sounds resemble the sound of rain. In addition, John’s comment, “Why don’t we use a lot of different ones so it doesn’t get boring,” indicated that John understood that audio signs functioned to gain and maintain the reader’s attention (as suggested by Murray, 2010). This excerpt demonstrates the students’ ability to connect the transition sounds to the overall message of the composition as well as see that coherence within a text is critical. Unfortunately, in the end they could not come to a consensus on the transition sounds to use, so they used all different transition sounds, some that signified acid rain content and others that did not. (See Video 3.)

Video 3. Effective uses of transition sounds.

Mrs. Bowie’s Realization: Sound Can Communicate a Message

When the students unveiled their HyperStudio multimodal compositions on the last day of the project, Mrs. Bowie became aware of missed opportunities to discuss the communicative value of audio signs. At the conclusion of their presentations, Mrs. Bowie and the students engaged in a whole class critique:

| Speaker | Utterance |
| --- | --- |
| Mrs. Bowie: | Let’s critique what you did so that you can help others down the line. First, when it comes to sound. We heard the lion roaring a few times. Was there anything that had anything to do with zoo animals in there? |
| Students: | No [in unison]. |
| Mrs. Bowie: | So was that really an appropriate transition sound? So when you are using buttons [a place to add transition sounds], boys and girls, you want to make sure that the noises that you choose—when you are using the sounds—need to match whatever it is you are talking about, in this case acid rain. |

Mrs. Bowie realized that the students needed to think about the message they were conveying when using sound. If Mrs. Bowie had a deeper understanding of the function of sounds, she could have talked about how literal sounds can be used to convey information or how nonliteral sounds, such as environmental sounds, can be used to relate new information to existing knowledge (Gaver, 1993a, 1993b). Unfortunately, this comment was the only one she made throughout the 6 weeks that addressed the communicative value of sounds. Further, she did not address the fact that sound, like image, can gain, focus, direct, and maintain readers’ attention (Bridgett, 2010; Kohfeld, 1971; Murray, 2010).

Her instruction within the classroom context maintained more of a print-based perspective, and the students drew upon this context when using signs. After this class ended, Mrs. Bowie, a reflective teacher, commented, “I was so wrapped up in teaching the HyperStudio that I didn’t think about telling the students the appropriate ways to use sound.”

Mrs. Bowie represents many teachers who have to rethink literacy instruction to include discussions around writing with signs beyond the written word. For her entire life, the printed word has been the main focus within the schools she has attended and in the school where she educates children. Consequently, higher education and those who offer professional development opportunities around digital technologies need to continue to teach teachers not only the operational use of software, but also to open up conversations about the potential communicative value of using sound. Equipped with this new knowledge teachers can, in turn, discuss the potential communicative value of sound and other modes with their students. As theories of semiotics posit, the way in which members of a particular culture use tools such as digital technologies impacts how that tool is integrated into society.

Discussion: Moving Beyond Sound As the Optional Extra

The findings in this study support the development of pedagogical practices that raise the status of audio signs as an important mode of communication. Both teachers and students would benefit from considering audio signs as possible communicative resources and as a mode that supports meaning construction when composing and reading digital texts. Cope and Kalantzis (2000) and the NLG (1996) identified audio signs as one of the five modes of meaning within multimodal text; as such, this study also highlights the importance of teaching students about audio as a mode of communication.

Expanding educators’ instructional focus from operational aspects of using technologies to more authentic literacy practices where technologies are used for communicative purposes (Miller & Borowicz, 2006) may assist in broadening the instructional focus to include the orchestration of multiple modes (Kress, 2005). Never has access to composing with multiple sign systems been so available for the general population. Digital tools and software allow students ways to incorporate visual, audio, and linguistic signs into their multimodal compositions.

Nevertheless, the use of technology in a classroom alone is not sufficient (Blackstock & Miller, 1992). For example, in this study, the HyperStudio software had the capability to play music on one slide, across slides, or between slides. As pointed out earlier, the only explicit teaching in how to use various tools in the software program was focused on how to insert images, draw images, or use text tools that increased the size, style, or color of the font. No explicit instruction on inserting sound was provided. Consequently, the students did not use certain sounds like narration, voice-over, or music to convey their message. These pedagogical decisions within the classroom context confirmed that sound is “often treated as little more than a kind of optional extra” (van Leeuwen, 1999, p. 197).

Where Mrs. Bowie’s teaching of sound intersected with that of filmmakers and video-game, educational software, and web designers is that in most cases each is still treating sound as an add-on or an embellishment. Just as others in sound design have argued for a more holistic perspective of sound within multimedia (e.g., Bishop et al., 2008; Bridgett, 2010; Chion, 1990; Sider, 2003), so too must teachers consider this more holistic perspective when teaching students to compose multimodally. Otherwise, educators will not use the technology or sound to its full communicative potential.

An Illustration From Documentary Film and Parallel Pedagogies

Leander’s (2009) parallel pedagogies is a useful framework for professional development for teachers and for classroom instruction because it incorporates both new and old literacy practices, provides opportunities for learners to develop their conceptual and working knowledge of various semiotic resources through composing, and is driven by comparison and analogy across multiple mediums. The incorporation of new and old literacy practices affords learners the opportunity to see how the new text is similar to and different from the old text. Essentially, through these comparisons teachers can learn about the different affordances and limitations of using various signs and mediums.

Mrs. Bowie asked her students to compose a nonfiction, multimodal text about the impact of acid rain from the perspective of a scientist. Because most students are familiar with films and have seen documentaries in a school context, Mrs. Bowie could have easily begun instruction by examining the use of visual, audio, and linguistic signs in a documentary. Mrs. Bowie might have scaffolded exploration and use of audio signs in the following ways.

Using Voice-Over and Narration. Beginning with audio signs, Mrs. Bowie could have had the students view segments of different documentaries where both voice-overs and narration are used. Then the students could have discussed what effect the narration or voice-over had on the information and credibility of the message. Further discussion about what the sound designers did to create the impression presented would assist students in understanding how to create that credibility. The voice-overs or narrators used in documentaries are typically authoritative and speak directly to the viewer, offering information, explanations, and opinions (Bridgett, 2010). Likewise, Mrs. Bowie’s students could have used voice-overs or narration to speak directly to the viewer about the impact of acid rain from their perspective.

Music and Sound Effects. Mrs. Bowie and her students could view clips from various documentaries and ask:

- What music or sound effect has been added?

- Where has it been added—between scenes or in the background of the narrator’s voice?

- What effect does the sound have on the message, mood, or engagement of the viewer?

- Is the sound used to bridge between scenes? If yes, what meaning is conveyed?

These questions could have led to exploration of various conventions of sound use in documentaries and students could then use this information as a basis to explore sound use in their multimedia presentations.

Using Masking to Explore the Sound Image Relationship. Direct explorations of the sound and image relationship are critical if students are to understand the affordances and limitations of various signs, as well as better understand the concept of layering meaning. The next essential step after critiquing sound use in the genre of documentary would be participating in lessons where students engage in brief learning experiences called masking (Sider, 2003, p. 10). Masking is a technique in which students view a brief animation or film with no sound. The teacher asks the students to interpret what is occurring in the scene. What are they looking at? Which actions stood out to them? The teacher then shows the scene with music, asking similar questions to see if any interpretations have changed. Subsequently, the teacher adds different music with varying rhythms, tempo and intensity to the same animation with the goal of exploring how different music changes the meaning. Some example questions teachers might ask are:

- How does timing of music or a sound impact meaning?

- How does sound influence motion on the screen?

Afterward, moving to a more hands-on experience, students can work at computers and use different sounds with the same animation to alter the meaning. Through composing exercises such as these the students may develop a better understanding of how sound shapes image and image shapes sound.

Focusing on Sound in Pre- and Postproduction. Mrs. Bowie could then present the assignment of the HyperStudio compositions and incorporate discussions and exploration around sound use during preproduction, production, and postproduction in order to direct students toward generating more creative interaction and integration of sound in their multimedia projects (as in Yantac & Ozcan, 2006).

In the classroom, during preproduction, composers would plan the integration of sound in the early stages of production by envisioning the use of voice-overs, narration, sound effects, and music. Considering the use of sound in the preproduction phase would require the incorporation of instructional time early in the composition process for students to investigate and begin thinking about how to integrate sound. In postproduction, composers would make modifications and fine-tune the sound integration.

Conclusion

Mrs. Bowie was a highly motivated, reflective teacher, but she, like all teachers, needs specific examples and models to follow when learning to educate her students about ways to communicate with sound. For teachers to use digital technologies to their fullest communicative capabilities, they must first have opportunities to learn the content knowledge needed. Specifically, analyzing Mrs. Bowie’s instructional focus on the use of sound and elaborating on ways Mrs. Bowie could have shifted the instructional focus creates a portrait of classroom practice that teachers and teacher educators can utilize when attempting to change their own pedagogical practices. If thoughtful ways of exploring the relationship between sound and meaning are not introduced, students will continue to connect fun sounds (e.g., lion’s roar) to their compositions instead of making more thoughtful connections around the communicative impact of sounds. The lack of focus on the communicative functions of sound will maintain the status quo of using sound as an add-on or decoration and result in the underuse of the communicative potentials of sound with digital technologies.

References

Altman, R. (1992). Sound theory/sound practice. New York, NY: Routledge.

Apple Classroom of Tomorrow. (1995). Changing the conversations about teaching, learning, & technology: A report of 10 years of ACOT research. Cupertino, CA: Apple Computer. Retrieved from imet.csus.edu/imet1/baeza/PDF%20Files/Upload/10yr.pdf

Bailey, N. (2006). Designing social futures: Adolescent literacies in and for new times. (Unpublished doctoral dissertation). University at Buffalo, State University of New York.

Bearne, E., & Wolstencroft, H. (2007). Visual approaches to teaching writing: Multimodal literacy 5-11. Thousand Oaks, CA: Sage.

Bezemer, J., & Kress, G. (2008). Writing in multimodal texts: A social semiotic account of designs for learning. Written Communication, 25, 166-195.

Bigand, E. (1993). Contributions of music to research on human auditory cognition. In S. McAdams, & E. Bigand (Eds.), Thinking in sound (pp. 231-277). New York, NY: Oxford University.

Bishop, M.J., Amankwatia, T.B., & Mitchell Cates, W. (2008). Sound’s use in instructional software to enhance learning: A theory-to-practice content analysis. Educational Technology Research and Development, 56, 467-486.

Blackstock, J., & Miller, L. (1992). The impact of new information technology on young children’s symbol weaving efforts. Computers & Education, 18, 209-221.

Bridgett, R. (2010). From the shadows of film sound: Cinematic production and creative process in video game audio: collected publications 2000-2010 [Self-published e-book].

Brown, F., Zanuck, R. (Producers), & Spielberg, S. (Director). (1975). Jaws [Motion picture]. Hollywood, CA: Universal Studios.

Chion, M. (1990). Audio-vision: Sound on screen. New York, NY: Columbia University Press.

Cope, B., & Kalantzis, M. (2000). Designs for social futures. In B. Cope & M. Kalantzis (Eds.), Multiliteracies: Literacy learning and the design of social futures (pp. 203-234). New York, NY: Routledge.

Deutsch, D. (1986). Auditory pattern recognition. In K. Bokk, L. Kaufman, & J. Thomas (Eds.), Handbook of perception and human performance (Vol. 2, pp. 31-32. 49). New York, NY: Wiley.

Fairclough, N. (1995). Critical discourse analysis. London, England: Longmans.

Gall, M., & Breeze, N. (2005). Music composition lessons: The multimodal affordances of technology. Educational Review, 57(4), 415-434.

Gaver, W. (1993a). Synthesizing auditory icons. Proceedings of ACM INTERCHI’93 Conference on Human Factors in Computing Systems. New York, NY: Association for Computing Machinery. Retrieved from http://dl.acm.org

Gaver, W. (1993b). What in the world do we hear? An ecological approach to auditory source perception. Ecological Psychology, 5(1), 1-29.

Gee, J.P. (2003). What video games have to teach us about learning and literacy. New York, NY: Palgrave Macmillan.

Halliday, M.A.K. (1978). Language as social semiotic: The social interpretation of language and meaning. Sydney, Australia: Edward Arnold.

Harmon, R. (1988). Film producing: Low-budget films that sell. Hollywood, CA: Samuel French Trade.

Healy, A. (Ed.) (2008). Multiliteracies and diversity in education: New pedagogies for expanding landscapes. Melbourne, Australia: Oxford University Press.

Hodge, R., & Kress, G. (1988). Social semiotics. Cambridge, England: Polity.

Hull, G.A., & Nelson, M.E. (2005). Locating the semiotic power of multimodality. Written Communication, 22, 224-261.

Jewitt, C. (2005). Multimodality, “Reading” and “Writing” for the 21st Century. Discourse, 26(3), 315-331.

Jewitt, C., & Kress, G. (2003). Multimodal literacy. New York, NY: P. Lang.

Kalantzis, M., & Cope, B. (2005). Learning by design. Melbourne, Australia: Victorian Schools Innovation Commission.

Knobel, M., & Lankshear, C. (2007). A new literacies sampler. New York, NY: Peter Lang.

Kohfeld, D.L. (1971). Simple reaction time as a function of stimulus intensity in decibels of light and sound. Journal of Experimental Psychology, 88, 251-257.

Kress, G. (1998). Visual and verbal modes of representation in electronically mediated communication: the potentials of new forms of text. In I. Snyder (Ed.), Page to screen: Taking literacy into the electronic era (pp. 5-79). London, England: Routledge.

Kress, G., & van Leeuwen, T. (1996). Reading images. London, England: Routledge.

Kress, G., & van Leeuwen, T. (2001). Multimodal discourse: The modes and media of contemporary communication. London, England: Hodder Education.

Lankshear, C. & Knobel, M. (2007). Sampling “the new” in New Literacies. In M. Knobel & C. Lankshear (Eds.), A new literacies sampler (pp. 1-24). New York, NY: Peter Lang.

Leander, K. (2009). Composing with old and new media: toward parallel pedagogy. In V. Carrington & M. Robinson (Eds.), Digital literacies: Social learning and classroom practices (pp. 147-164). London, England: Sage.

Mahiri, J. (2006). Digital DJ-ing: Rhythms of learning in an urban school. Language Arts, 84(1), 55-62.

McVee, M. B., Bailey, N. M., & Shanahan, L. E. (2008). Using digital media to interpret poetry: Spiderman meets Walt Whitman. Research in the Teaching of English, 43(2), 112-143.

McVee, M. B., Bailey, N. M., & Shanahan, L. E. (2012). The (artful) deception of technology integration and the move toward a new literacies mindset. In S. M. Miller & M. B. McVee (Eds.), Multimodal composing in classrooms: Learning and teaching in the digital world (pp. 13-31). New York, NY: Routledge.

Merriam, S.B. (1998). Qualitative research and case study applications in education. San Francisco, CA: Jossey-Bass.

Miller, S.M. (2008). Teacher learning for new times: Repurposing new multimodal literacies and digital video composing for schools. In J. Flood, S.B. Heath, & D. Lapp (Eds.) Handbook of research in teaching literacy through the communicative and visual arts. (Vol. 2; pp. 441-453). New York, NY: Lawrence Erlbaum.

Miller, S.M., & Borowicz. (2006). Why multimodal literacies? Designing digital bridges to 21st century teaching and learning. Buffalo, NY: GSE Publications.

Miller, S.M., & McVee, M.B. (Eds.). (2012). Multimodal composing in classrooms: Learning and teaching in the digital world. New York, NY: Routledge.

Murray, L. (2010). Authenticity and realism in documentary sound. The Soundtrack, 3(2), 131-137.

Myers, J., & Beach, R. (2001). Hypermedia authoring as critical literacy. Journal of Adolescent and Adult Literacy, 44(6), 538-546.

New London Group. (1996). A pedagogy of multiliteracies: Designing social futures. Harvard Educational Review, 66(1), 60-92.

Pea, R. (1993). Practices of distributed intelligence and designs for education. In G. Salomon (Ed.), Distributed cognition: Psychological and educational considerations (pp. 47-87). Cambridge, England: Cambridge University Press.

Perkins, M. (1983). Sensing the world. Indianapolis, IN: Hackett.

Ranker, J. (2008). Making meaning on the screen: Digital video production about the Dominican Republic. Journal of Adolescent and Adult Literacy, 51(5), 410-422.

Ranker, J. (2009). Learning nonfiction in an ESL class: The interaction of situated practice and teacher scaffolding in a genre study. Reading Teacher, 62(7), 580-589.

Shanahan, L.E. (2006). Reading and writing multimodal texts through information and communication technologies. Unpublished doctoral dissertation, University at Buffalo/The State University of New York.

Sider, L. (2003). If you wish to see, listen: The role of sound design. Journal of Media Practice, 4(1), 5-15.

Snyder, T.D., Tan, A.G., & Hoffman, L. (2006). Digest of education statistics 2005 (NCES 2006-030). Washington, DC: U.S. Government Printing Office.

Underwood, M. (2008). I wanted an electronic silence…: Musicality in sound design and the influences of new music on the process of sound design for film. The Soundtrack, 1(3), 193-210.

Unsworth, L. (2001). Teaching multiliteracies across the curricula. Buckingham, England: Open University Press.

van Leeuwen, T. (1999). Speech, music, sound. London, England: Macmillan.

Winn, W.D. (1993). Perception principles. In M. Fleming, & W.H. Levie (Eds.), Instructional message design: Principles from the behavioral and cognitive sciences (2nd ed.; pp. 55-126). Englewood Cliffs, NJ: Educational Technology.

Yantac, A.E., & Ozcan, O. (2006). The effects of the sound-image relationship within sound education for interactive design. Digital Creativity, 17(2), 91-99.

Yost, W.A. (1993). Overview: Psychoacoustics. In W.A. Yost, A.N. Popper, & R.R. Fay (Eds.), Human psychophysics (pp. 1-12). New York, NY: Springer.

Zammit, K. (2010). The new learning environments framework: scaffolding the development of multiliterate students. Pedagogies, 5(4), 325-337.

Author Notes:

This research was supported in part by the Center for Literacy and Reading Instruction at the University at Buffalo/SUNY. (www.CLARI.buffalo.edu)

Lynn Shanahan

University at Buffalo
