A webquest can be defined as “an inquiry-oriented activity in which some or all of the information that learners interact with comes from resources on the Internet” (Dodge, 1997, para. 2). Webquests provide a way to use the Internet while incorporating sound learning strategies. Rather than simply pointing students to websites that may encourage them to cut and paste, well-structured webquests direct students to Internet resources that require the use of critical thinking skills and a deeper understanding of the subject being explored (March, 2003).

The way a webquest activity is designed discourages students from simply surfing the Internet in an unstructured manner. A webquest is constructed and presented in six parts called building blocks: introduction, tasks, process, resources, evaluation, and conclusion. Similar to a lesson plan, a webquest organizes students’ learning experiences using these building blocks and allows the teacher to evaluate learning outcomes.

Student centered and inquiry based, the webquest is generally constructed around a scenario of interest to students who work in small groups by following the steps in the webquest model to examine the problems, propose hypotheses, search for information with the Web links provided by the instructor, analyze and synthesize the information using guided questions, and present solutions to the problems. (Zheng, Perez, Williamson, & Flygare, 2008, p. 296)

The critical attributes of a webquest activity include an introduction that sets the stage and provides some background information, a task that is doable and interesting, a set of web-linked information sources needed to complete the task, a description of the process the learners should go through to accomplish the task, some guidance on how to organize the information, and a conclusion that brings closure to the quest and reminds participants of what they have learned (Dodge, 1997). The webquest method has been widely adopted in K-16 education (Zheng et al., 2008). Since its inception, the webquest model has been embraced by many educators, and consequently, teachers have created numerous webquests for all grade levels (MacGregor & Lou, 2004/2005).

One reason webquests have gained popularity is that teachers can adapt them. To design a successful webquest, teachers need to “compose explanations, pose questions, integrate graphics, and link to websites to reveal a real-world problem” (Peterson & Koeck, 2001, p. 10). Teachers report that the experience of designing and implementing webquests helps them “discover new resources, hone technology skills, and gain new teaching ideas by collaborating with colleagues” (p. 10).

Since webquests challenge students’ intellectual and academic ability rather than their simple web searching skills, they are said to be capable of increasing student motivation and performance (March, 2004), developing students’ collaborative and critical thinking skills (Perkins & McKnight, 2005), and enhancing students’ abilities to apply what they have learned to new learning (Pohan & Mathison, 1998). Thus, webquests have been widely adopted and integrated into K-12 and higher education curricula (Zheng, Stucky, McAlack, Menchana, & Stoddart, 2005) and several staff development efforts (Dodge, 1995).

Many studies have been conducted to determine the effects of webquests on teaching and learning in different disciplines and grade levels. Researchers have claimed that webquest activities create positive attitudes and perceptions among students (Gorrow, Bing, & Royer, 2004; Tsai, 2006), increase the learners’ motivation (Abbit & Ophus, 2008; Tsai, 2006), foster collaboration (Barroso & Clark, 2010; Bartoshesky & Kortecamp, 2003), enhance problem-solving skills, higher order thinking, and connection to authentic contexts (Abu-Elwan, 2007; Allan & Street, 2007; Lim & Hernandez, 2007), and assist in bridging the theory to practice gap (Laborda, 2009; Lim & Hernandez, 2007).

Although the Internet houses thousands of webquests, their quality varies (Dodge, 2001; March, 2003). In fact, some of them may not qualify as real webquests (March, 2003). March asserted that a good webquest must be able to “prompt the intangible aha experiences that lie at the heart of authentic learning” (March, 2003, p. 42). Both Dodge (2001) and March (2003) indicated that careful evaluation is needed before adapting a webquest for classroom use.

Webquests make it possible to involve students in online investigations without requiring them to spend time searching for relevant materials. A key advantage of webquests is that teachers can plan and implement learning experiences around relevant, credible websites they have identified, with which students can work confidently. Given their increasing use, it is important for educators to be able to find and use high-quality webquests.

Although webquests show great promise for enhancing student learning and motivation, the results of using them as teaching and learning tools may depend on how well they are designed in the first place. One of the biggest problems is that anybody with the right tools can create and publish a webquest online. Unlike books and journal articles, which are reviewed and edited before publication, webquests are subject to no formal evaluation process that limits what is posted on the Internet as a webquest. The result is a large number of webquests of uneven quality, which makes separating high-quality from low-quality webquests difficult. Therefore, careful and comprehensive evaluation of webquest design is an essential step in deciding whether to use a webquest. Because of their structure, rubrics provide a powerful means of judging the quality of webquests.

Evaluation of Webquests With Rubrics

Rubrics are scoring tools that allow educators to assess different components of complex performances or products based on different levels of achievement criteria. The rubric tells both instructor and student what is considered important when assessing (Arter & McTighe, 2001; Busching, 1998; Perlman, 2003).

One widely cited benefit of rubrics is increased consistency of judgment when assessing performance and authentic tasks. Rubrics are assumed to enhance the consistency of scoring across students and assignments, as well as between raters. Another frequently mentioned benefit is the possibility of validly assessing performance that cannot be captured by traditional written tests: rubrics allow complex competencies to be assessed validly without sacrificing reliability (Morrison & Ross, 1998; Wiggins, 1998). A third important benefit is the promotion of learning; that is, rubrics serve as both assessment and teaching tools. This effect has been documented in research on formative, self-, peer, and summative assessment. The explicit criteria and standards that are the essential building blocks of rubrics provide students with informative feedback, which, in turn, promotes learning (Arter & McTighe, 2001; Wiggins, 1998).

Although many rubrics have been developed for evaluating webquests, only three are widely used. Dodge (1997) listed six critical attributes of a webquest, which were later revised and converted into a webquest evaluation rubric (Bellofatto, Bohl, Casey, Krill, & Dodge, 2001). This rubric is designed to evaluate the overall aesthetics as well as the basic elements of a webquest. Each category is evaluated at three levels (Beginning, Developing, and Accomplished), and each cell is worth a set number of points. A teacher can score every category of the webquest at one of these levels and arrive at a total score out of 50 points that characterizes the webquest’s usefulness.

March (2004) created a rubric for evaluating webquest design, called the Webquest Assessment Matrix, that has eight criteria (Engaging Opening/Writing, the Question/Task, Background for Everyone, Roles/Expertise, Use of the Web, Transformative Thinking, Real World Feedback, and Conclusion). One unique aspect of this rubric is that it has no specific criteria for Web elements such as graphics and Web publishing; March suggested that one person’s cute animated graphic can be another’s flashing annoyance. Each of the eight criteria is evaluated at three levels: Low (1 point), Medium (2 points), and High (3 points), for a maximum score of 24 points.
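To make the arithmetic of the matrix concrete, the sketch below sums March's point values over the eight criteria named above. It is a minimal illustration under stated assumptions: the criterion labels and the Low/Medium/High point values come from the description above, while the function name `march_score` and the example ratings are hypothetical and not part of March's published matrix.

```python
"""Minimal sketch: totaling a webquest's score on March's (2004) matrix.

Criterion labels and point values follow the text; the function name and
the example ratings are hypothetical.
"""

LEVEL_POINTS = {"Low": 1, "Medium": 2, "High": 3}

CRITERIA = [
    "Engaging Opening/Writing",
    "The Question/Task",
    "Background for Everyone",
    "Roles/Expertise",
    "Use of the Web",
    "Transformative Thinking",
    "Real World Feedback",
    "Conclusion",
]

# Eight criteria, each worth at most 3 points.
MAX_SCORE = len(CRITERIA) * max(LEVEL_POINTS.values())


def march_score(ratings: dict) -> int:
    """Sum the points of the level chosen for each criterion.

    Every criterion must have a rating; an unknown level name raises KeyError.
    """
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"unrated criteria: {missing}")
    return sum(LEVEL_POINTS[ratings[c]] for c in CRITERIA)


if __name__ == "__main__":
    # Hypothetical evaluation: High everywhere except two Medium ratings.
    example = {c: "High" for c in CRITERIA}
    example["Use of the Web"] = "Medium"
    example["Real World Feedback"] = "Medium"
    print(f"{march_score(example)} / {MAX_SCORE}")  # prints "22 / 24"
```

Raising an error for unrated criteria, rather than treating them as zero, keeps a partially completed evaluation from being mistaken for a low score.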

The eMINTS (enhancing Missouri’s Instructional Networked Teaching Strategies) National Center also created a rubric based on Dodge’s work (eMINTS, 2006). Webquest creators are asked to use this rubric to evaluate their webquest design before submitting it for eMINTS evaluation. The eMINTS National Center then evaluates submissions and links from its website to the webquests that score at least 65 of 70 points. Teachers must first use the webquest in their classrooms before submitting it for evaluation; if they cannot use it in their own classroom, implementation in another grade-appropriate classroom is acceptable.

Approved webquests join the permanent collection of eMINTS’s national database of resources, which is available to all educators, and these resources are also shared in the professional development programs eMINTS offers to teachers. Therefore, having a webquest accepted by eMINTS provides recognition for its creator.

While these webquest design evaluation rubrics are being used by many educators, there have also been discussions and suggestions regarding certain elements of these rubrics. For example, Maddux and Cummings (2007) discussed the lack of focus on the learner and recommended the addition of “learner characteristics” to the Rubric for Evaluating Webquests (Bellofatto et al., 2001):

The rubric ‘Rubric for Evaluating Webquests’ did not contain any category that would direct a webquest developer to consider any characteristics of learners, such as age or cognitive abilities. Instead, the rubric focused entirely on the characteristics of the webquest, which does nothing to ensure a match between webquest’s cognitive demands and learner characteristics, cognitive or otherwise. (p. 120)

Finally, they suggested that teachers who develop and use webquests should be mindful of students’ individual differences, including but not limited to age, grade, and cognitive developmental level. To remind teachers of these considerations, they proposed changing the second item in Dodge’s (1997) list of critical attributes from “a task that is doable and interesting” to “a task that is doable, interesting, and appropriate to the developmental level and other individual differences of students with whom the webquest will be used” (p. 124).

Webquest design evaluation rubrics are created mainly to help educators identify high-quality webquests among thousands. This evaluation must be credible, trustworthy, and grounded in evidence (Wiggins, 1998). In other words, an assessment rubric should yield similar results regardless of who does the scoring and of when and where the evaluation is carried out. The more consistent the scores are across raters and occasions, the more reliable the assessment (Moskal & Leydens, 2000).

The current literature provides examples of rubrics used to evaluate the quality of webquest design. However, the reliability of webquest design rubrics has not yet been reported in the literature. This study aims to fill that gap by assessing the reliability of a webquest evaluation rubric that was built on the strengths of currently available rubrics and improved based on comments in the literature and feedback from educators.

Reliability

Assessments have implications and consequences for those being assessed (Black, 1998), since they frequently drive pedagogy and curriculum (Hildebrand, 1996). For example, high-stakes testing in schools has driven teachers and administrators to narrow the curriculum (Black, 1998). Assessments also shape learners’ motivation, sense of priorities, and learning tactics (Black, 1998).

Ideally, an assessment should be independent of who does the scoring, and the results should be similar no matter when and where the assessment is carried out, but this goal is rarely fully attainable. There is “nearly universal” agreement that reliability is an important property in educational measurement (Colton et al., 1997, p. 3). Many assessment methods require raters to judge some aspect of student work or behavior (Stemler, 2004), and the designers of assessments should strive to achieve high levels of reliability (Johnson, Penny, & Gordon, 2000). Two forms of reliability are especially relevant to rater-scored assessments. The first is interrater reliability, which refers to the consistency of scores assigned by multiple raters. The second is intrarater reliability, which refers to the consistency of scores assigned by one rater at different points in time (Moskal, 2000).

Interrater Reliability

Interrater reliability refers to “the level of agreement between a particular set of judges on a particular instrument at a particular time” and “provide[s] a statistical estimate of the extent to which two or more judges are applying their ratings in a manner that is predictable and reliable” (Stemler, 2004). Raters, or judges, are used when student products or performances cannot be scored objectively as right or wrong but require a rating of degree (Stemler, 2004).

Perhaps the most popular statistic for calculating the degree of consistency between judges is the Pearson correlation coefficient (Stemler, 2004).
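As a concrete reference point, the sketch below computes Pearson's r for one pair of judges from the usual definition (covariance divided by the product of the standard deviations). The score lists are hypothetical, and treating 1 to 3 rubric levels as interval data is an assumption that the Pearson coefficient makes implicitly.

```python
import math


def pearson_r(x: list, y: list) -> float:
    """Pearson correlation between two judges' scores on the same products."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length score lists of length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dx = [a - mean_x for a in x]
    dy = [b - mean_y for b in y]
    sxy = sum(a * b for a, b in zip(dx, dy))  # sum of co-deviations
    sxx = sum(a * a for a in dx)
    syy = sum(b * b for b in dy)
    if sxx == 0 or syy == 0:
        # A judge who gives every product the same score has no variance,
        # so the correlation is undefined.
        raise ValueError("correlation undefined for constant scores")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical ratings of six products by two judges on a 1-3 scale.
judge_a = [1, 2, 2, 3, 3, 1]
judge_b = [1, 2, 3, 3, 3, 1]
print(round(pearson_r(judge_a, judge_b), 2))  # 0.91
```

Because r depends only on whether the judges' scores rise and fall together, two judges whose scores differ by a constant offset still obtain r = 1; this is why r is described as a consistency estimate rather than an agreement estimate.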

One beneficial feature of the Pearson correlation coefficient is that the scores on the rating scale can be continuous. Like the simple percent-agreement statistic (the proportion of cases to which two judges assign the same score), the Pearson correlation coefficient can be calculated only for one pair of judges at a time and for one item at a time. Values greater than .70 are typically considered acceptable for consistency estimates of interrater reliability (Barrett, 2001; Glass & Hopkins, 1996; Stemler, 2004). When multiple judges are used, Cronbach’s alpha can be used to estimate interrater reliability (Crocker & Algina, 1986). Cronbach’s alpha coefficient is used as a measure of consistency when evaluating multiple raters on ordered category scales (Bresciani, Zelna, & Anderson, 2004). A low Cronbach’s alpha indicates that much of the variance in the scores is due to measurement error (Crocker & Algina, 1986).
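For the multiple-judge case, a minimal sketch of Cronbach's alpha follows, treating each judge as an "item" and each rated product as a case: alpha = k/(k - 1) × (1 - sum of judge variances / variance of the summed scores), where k is the number of judges. The data layout and the example ratings are assumptions for illustration, not the study's data; the second example shows that alpha is not bounded below by 0.

```python
from statistics import variance


def cronbach_alpha(scores: list) -> float:
    """Cronbach's alpha with judges treated as 'items'.

    scores[i][j] is judge j's score for product i (rows = products,
    columns = judges). Sample variances are used throughout.
    """
    if len(scores) < 2:
        raise ValueError("need at least two rated products")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular table with at least two judges")
    judge_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("alpha undefined when summed scores do not vary")
    return (k / (k - 1)) * (1 - sum(judge_vars) / total_var)


# Hypothetical: three judges who agree closely on five products (1-3 scale).
consistent = [[1, 1, 2], [2, 2, 2], [2, 3, 3], [3, 3, 3], [1, 2, 1]]
print(round(cronbach_alpha(consistent), 3))

# Two judges whose scores move in opposite directions give a negative alpha.
opposed = [[1, 3], [2, 2], [3, 2]]
print(round(cronbach_alpha(opposed), 3))
```

The same computation gives the usual item-level internal consistency if the columns hold rubric items rather than judges.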

Intrarater (Test-Retest) Reliability

Intrarater (test-retest) reliability refers to the consistency of scores assigned by one rater at different points in time (Carol, Deana, & Donald, 2007; Moskal & Leydens, 2000). Unlike measures of internal consistency, which indicate the extent to which all of the questions that make up a scale measure the same construct, the test-retest reliability coefficient indicates whether the instrument yields consistent results over time and across multiple administrations.

In the case of a rubric, this means having the same group of evaluators rate the same products with the same rubric on two different occasions. If the correlation between the scores obtained from the two administrations is high, the rubric is considered to have high test-retest reliability. The test-retest reliability coefficient is simply a Pearson correlation coefficient for the relationship between the total scores from the two administrations. Additionally, the intraclass correlation coefficient (ICC) is used when the consistency between ratings from the same raters is evaluated.

We developed a webquest rubric and investigated its reliability using multiple measures. The following section describes the process through which the webquest evaluation rubric was created and used for evaluation of three webquests by multiple evaluators. Results of reliability analyses on the rubric and discussions of findings are presented.

Procedures

Construction of the ZUNAL Webquest Evaluation Rubric

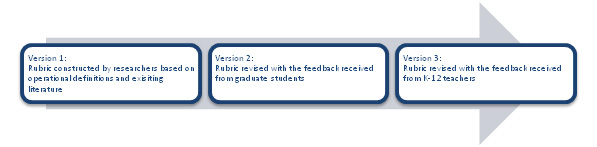

The ZUNAL rubric was developed in three stages (Figure 1). First, a large set of rubric items was generated based on operational definitions and the existing literature on currently available webquest rubrics. This step drew items from the three most widely used rubrics, created by Bellofatto et al. (2001), March (2004), and eMINTS (2006). In addition, studies that critiqued current webquest rubrics and offered suggestions (Maddux & Cummings, 2007) were considered for item selection and modification. As a result of this process, the first version of the ZUNAL rubric was organized around nine categories (version 1, Appendix A).

Second, graduate students (n = 15) enrolled in a course titled Technology and Data were asked to rate the clarity of each item on a 4-point scale ranging from 1 (not at all) to 6 (very well/very clear). They were also asked to supply written feedback on any items that were either unclear or unrelated to the constructs, and items were revised based on this feedback (version 2, Appendix A). Finally, K-12 classroom teachers (n = 23) involved in webquest creation and classroom implementation were invited to complete a survey rating the rubric elements for value and clarity. Items were again revised based on the feedback (final version, Appendix B).

Figure 1. Construction of the ZUNAL webquest evaluation rubric.

At the conclusion of this three-step process, the webquest evaluation rubric comprised nine subscales with 23 indicators underlying the proposed rubric constructs: title (4 items), introduction (1 item), task (2 items), process (3 items), resources (3 items), evaluation (2 items), conclusion (2 items), teacher page (2 items), and overall design (4 items). Each indicator was rated on a 3-point scale: unacceptable, acceptable, or target (see Appendix B).
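The rubric's structure lends itself to a simple data representation. The sketch below encodes the nine subscales and their item counts as listed above and summarizes one completed evaluation as per-subscale means and an overall item mean. The numeric coding (unacceptable = 1, acceptable = 2, target = 3) mirrors the rating scale noted under Table 1, while the function `summarize` and the example ratings are hypothetical and not part of the ZUNAL module.

```python
"""Minimal sketch: the ZUNAL rubric's structure and a summary of one evaluation.

Subscale names, item counts, and scale labels follow the text; the
function name and the example ratings are hypothetical.
"""

ZUNAL_SUBSCALES = {
    "title": 4,
    "introduction": 1,
    "task": 2,
    "process": 3,
    "resources": 3,
    "evaluation": 2,
    "conclusion": 2,
    "teacher page": 2,
    "overall design": 4,
}
SCALE = {"unacceptable": 1, "acceptable": 2, "target": 3}


def summarize(ratings: dict) -> tuple:
    """Return (per-subscale mean, overall item mean) for one completed rubric.

    ratings maps each subscale name to a list with one scale label per item.
    """
    subscale_means = {}
    all_points = []
    for name, n_items in ZUNAL_SUBSCALES.items():
        labels = ratings.get(name, [])
        if len(labels) != n_items:
            raise ValueError(f"{name}: expected {n_items} ratings, got {len(labels)}")
        points = [SCALE[label] for label in labels]
        subscale_means[name] = sum(points) / n_items
        all_points.extend(points)
    return subscale_means, sum(all_points) / len(all_points)


if __name__ == "__main__":
    # Hypothetical evaluation: every item at "target" except the two task items.
    example = {name: ["target"] * n for name, n in ZUNAL_SUBSCALES.items()}
    example["task"] = ["acceptable", "unacceptable"]
    per_subscale, overall = summarize(example)
    print(per_subscale["task"], round(overall, 2))  # prints "1.5 2.87"
```

Checking the item count per subscale guards against an incomplete evaluation silently shifting the overall mean.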

Application of the ZUNAL Webquest Evaluation Rubric

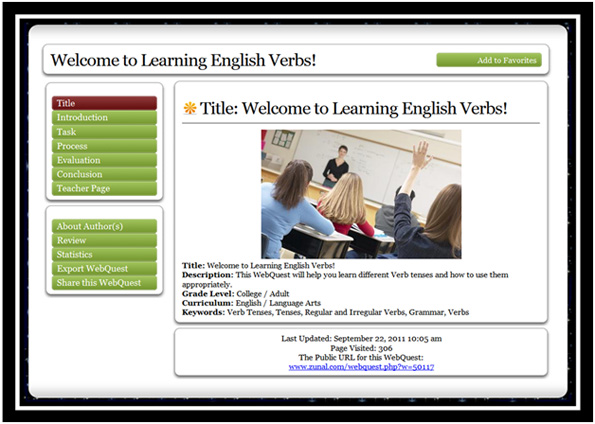

Upon completion, the rubric was added as a module to ZUNAL (www.zunal.com), one of the most commonly used webquest builder applications, for pilot testing. Founded in 2001, ZUNAL is a web application that educators can use to create and publish webquests. It has more than 160,000 members and gains approximately 300 new users daily. Currently, the ZUNAL database contains over 48,000 webquests completed and published by its users. Figure 2 is a screenshot of a webquest published in ZUNAL.

Figure 2. A sample Webquest at ZUNAL.

Because of its large number of webquests and users, we selected this web application both as a source of webquests to evaluate with the new rubric and for recruiting volunteers for reliability testing. After being added to the website, the rubric was tested on different operating systems (Windows, Mac, and UNIX) and Internet browsers (Internet Explorer, Firefox, Chrome, etc.). The researchers also verified that any webquest selected from ZUNAL could easily be evaluated online with the rubric module.

Selection of the Webquests

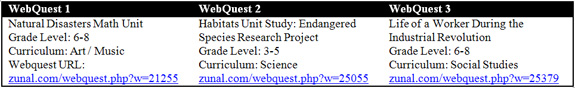

To investigate the reliability of the rubric, the researchers selected three webquests from ZUNAL for evaluation. ZUNAL already has a basic five-star rating system, in which users and visitors post ratings and comments for each webquest. Although the reliability of this rating system has not been measured, it allowed us to gauge the perceived quality of the webquests to be selected.

To ensure the inclusion of webquests of varying quality, we randomly selected one low-quality (1 or 2 stars; Webquest 1), one medium-quality (3 or 4 stars; Webquest 2), and one high-quality (5 stars; Webquest 3) webquest for the study (Figure 3).
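The one-per-band selection can be sketched as a stratified random draw. This is a minimal sketch, assuming each webquest carries a whole-number star rating (ZUNAL's actual data format is not described here); the function `pick_one_per_band`, the catalog, and its identifiers are hypothetical, and a seeded `random.Random` makes the draw reproducible.

```python
import random

# Star bands as described above (whole-star ratings assumed).
BANDS = {"low": (1, 2), "medium": (3, 4), "high": (5, 5)}


def pick_one_per_band(star_ratings: dict, rng: random.Random) -> dict:
    """Randomly choose one webquest from each star-rating band.

    star_ratings maps a webquest identifier to its whole-number star rating.
    """
    picks = {}
    for band, (low, high) in BANDS.items():
        # Sorting the pool keeps seeded draws reproducible.
        pool = sorted(wq for wq, stars in star_ratings.items() if low <= stars <= high)
        if not pool:
            raise ValueError(f"no webquests in the {band} band")
        picks[band] = rng.choice(pool)
    return picks


# Hypothetical catalog; identifiers and ratings are invented for illustration.
catalog = {"wq-a": 1, "wq-b": 2, "wq-c": 3, "wq-d": 4, "wq-e": 4, "wq-f": 5, "wq-g": 5}
print(pick_one_per_band(catalog, random.Random(2024)))
```

Drawing within bands, rather than from the whole catalog, guarantees that each quality level is represented even when one band dominates the catalog.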

Figure 3. Websites selected for evaluation.

Invitation for Evaluation

Following the selection of the three webquests, an online invitation survey was created and sent to ZUNAL users. The survey provided a description of the study, a consent form, and questions to determine eligibility for participation. Only users who had already published a webquest on ZUNAL with a rating higher than three stars were invited. Of the 118 users invited, 23 agreed to participate in the study.

Participant Demographics

Seven males (30%) and 16 females (70%) participated in the study, and their ages ranged from 26 to 53 (M = 39.34, SD = 7.95). Eleven participants were K-12 teachers, 8 participants were college faculty members from both public and private universities, and 4 participants were College of Education graduate students from various colleges. All of the participants had published a webquest with a four- or five-star rating on the ZUNAL webquest builder site.

Evaluation of Webquests

The 23 participants were given a week to evaluate the three webquests using the final version of the rubric. To avoid biasing their evaluations, participants were not informed about the selection process or the varying quality of the three webquests. They were told that all three webquests had been selected randomly, regardless of topic, grade level, and star rating.

A month later, the evaluators were asked to reevaluate the same webquests. To encourage their participation, we offered to upgrade their ZUNAL accounts for unlimited webquest creation. We chose a 30-day interval between the evaluations because a shorter interval might have inflated the correlations, as evaluators could recall their first ratings, whereas a longer interval would likely have lowered the return rate, since these evaluations were voluntary. The rubric scores for each webquest were entered into SPSS 17.0 for reliability analysis.

Results

Reliability

A series of statistical procedures was employed to investigate the internal consistency and intrarater reliability of the ZUNAL webquest evaluation rubric. First, we averaged all raters’ ratings on each webquest and assigned an overall mean score to the first and second ratings of each webquest. Second, we correlated the mean scores of the first and second ratings of the three webquests. Table 1 provides descriptive information about the first and second ratings of each webquest (the complete data set is included in Appendix C). The first and second rating scores, calculated from all raters’ ratings, were similar, with small standard deviations.

Table 1

Descriptive Information on First and Second Ratings

| Rating | N | Mean | SD |
|---|---|---|---|
| WQ1 first rating | 23 | 1.93 | .054 |
| WQ1 second rating | 23 | 1.96 | .055 |
| WQ2 first rating | 23 | 2.48 | .054 |
| WQ2 second rating | 23 | 2.51 | .074 |
| WQ3 first rating | 23 | 2.74 | .083 |
| WQ3 second rating | 23 | 2.76 | .079 |

Note. WQ = webquest. Rating scale: Unacceptable = 1; Acceptable = 2; Target = 3.

Internal Consistency. First, Cronbach’s alpha was calculated for the first and second ratings of all three webquests to assess the internal consistency of the webquest evaluation instrument. Cronbach’s alpha usually falls between 0 and 1, although the coefficient has no lower limit and can be negative. The closer Cronbach’s alpha is to 1.0, the greater the internal consistency of the items in the scale. In this study, Cronbach’s alpha for the first and second ratings was .956 and .953, respectively, indicating a high level of internal consistency in the evaluations.

Intrarater Reliability. A Pearson correlation was conducted to examine test-retest consistency between the first and second ratings of each webquest, as presented in Table 2. The averaged first and second ratings of each webquest were significantly correlated: strongly for Webquests 1 (.851) and 3 (.860) and moderately for Webquest 2 (.606).

Table 2

Pearson Correlations on Averaged First and Second Ratings

| Webquest | r (first vs. second rating) |
|---|---|
| WQ1 | .851** |
| WQ2 | .606** |
| WQ3 | .860** |

Note. WQ = webquest. **Correlations significant at the .01 level.

Moreover, a Pearson correlation was conducted as a test-retest reliability measure to examine the consistency between the first and second ratings of each evaluator on each webquest (Table 3).

Table 3

Pearson Correlation on Individual Raters’ First and Second Ratings

| Rater | WQ1 | WQ2 | WQ3 |
|---|---|---|---|
| 1 | .875** | .878** | 1.00** |
| 2 | .880** | .820** | .872** |
| 3 | .851** | .829** | .913** |
| 4 | .937** | 1.00** | .872** |
| 5 | .801** | 1.00** | .900** |
| 6 | .821** | .807** | 1.00** |
| 7 | .904** | .940** | .907** |
| 8 | .938** | .842** | .798** |
| 9 | 1.00** | 1.00** | .907** |
| 10 | 1.00** | .939** | 1.00** |
| 11 | 1.00** | .940** | .889** |
| 12 | .937** | .832** | .692** |
| 13 | 1.00** | .942** | .917** |
| 14 | 1.00** | 1.00** | .799** |
| 15 | .881** | .939** | .900** |
| 16 | .929** | .845** | 1.00** |
| 17 | .938** | .885** | 1.00** |
| 18 | 1.00** | .940** | .889** |
| 19 | .929** | .940** | 1.00** |
| 20 | .938** | .920** | .889** |
| 21 | .937** | .939** | .799** |
| 22 | .937** | .875** | .845** |
| 23 | 1.00** | .729** | .697** |

Note. WQ = webquest. **Correlation is significant at the .01 level (2-tailed).

As the table indicates, each evaluator’s two evaluations of the same webquest, 1 month apart, yielded strong and significant correlations ranging from .692 to 1.00. As an additional reliability measure, intraclass correlation coefficients (random effects, absolute agreement) were calculated for each evaluation of each webquest (Table 4).
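The absolute-agreement ICC can be sketched from two-way ANOVA mean squares. This minimal sketch assumes that "random, absolute" corresponds to the two-way random-effects, absolute-agreement, single-measure form (often labeled ICC(2,1)) and that the ratings are arranged with rated targets as rows and raters as columns; the study does not state which units served as targets, and the example data below are illustrative, not the study's.

```python
def icc_2_1(ratings: list) -> float:
    """Single-measure ICC, two-way random effects, absolute agreement.

    ratings[i][j] is rater j's score for target i (n targets x k raters).
    Computed from two-way ANOVA mean squares (Shrout and Fleiss's ICC(2,1)).
    """
    n = len(ratings)
    k = len(ratings[0])
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a rectangular table with >= 2 targets and >= 2 raters")
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between targets
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between raters
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # In the absolute-agreement form, rater (column) variance lowers the ICC.
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Illustrative data: six targets scored by four raters.
example = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(round(icc_2_1(example), 2))  # 0.29
```

Unlike the Pearson coefficient, this form penalizes a rater who is systematically harsher or more lenient than the others, because between-rater variance (`ms_cols`) enters the denominator.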

Table 4

Intraclass Correlation Coefficients for Single Measures

| Webquest | Evaluation | Intraclass correlation coefficient |
|---|---|---|
| Webquest 1 | Evaluation 1 | .825 |
| Webquest 1 | Evaluation 2 | .797 |
| Webquest 2 | Evaluation 1 | .793 |
| Webquest 2 | Evaluation 2 | .760 |
| Webquest 3 | Evaluation 1 | .673 |
| Webquest 3 | Evaluation 2 | .766 |

Discussion and Conclusions

Rubrics appeal to educators and learners for many reasons. They are powerful tools for both teaching and assessment: by making teachers’ expectations clear and showing students how to meet those expectations, they can improve student performance as well as monitor it. The result is often a marked improvement in the quality of student work and learning. Although rubrics are frequently used to evaluate whether a webquest is well designed, no research we located has assessed the quality of these rubrics as measurement instruments (i.e., their reliability and validity). Establishing that a rubric reliably measures webquest quality is clearly desirable, particularly for educators who use webquests to integrate technology into their teaching. A reliable webquest evaluation rubric can not only help educators evaluate the webquests available on the Internet before using them in their classrooms but also guide them when they create their own webquests.

We developed the ZUNAL webquest rubric and investigated its reliability using multiple measures. To our knowledge, this is the first study to systematically assess the reliability of a webquest design evaluation rubric. The rubric was built on the strengths of currently available rubrics and improved based on comments in the literature and feedback from educators. The outcome was a comprehensive rubric that takes into account the technical, pedagogical, and aesthetic aspects of webquests as well as the characteristics of learners. Because webquests are used in schools and classrooms for educational purposes, a rubric should consider not only the subject matter and its presentation but also the characteristics of the learners who will work with the webquest.

The statistical analyses conducted on the ZUNAL webquest rubric indicated acceptable reliability. The consistency of the rubric scores may reflect the comprehensiveness of the rubric and the clarity of its items and descriptors. Because we were unable to locate any existing studies of the reliability of webquest design rubrics, we could not compare these results with prior findings.

It is important to note that two of the elements in the rubric (title and keywords) may appear to be routine, easily achieved parts of webquest design, which could inflate reliability scores. However, researchers and other educators participating in the study considered these elements carefully, constructing detailed criteria for each, because they are as crucial as the other elements of the rubric. To illustrate, an educator who created a webquest on the life cycle of a butterfly could title it “life cycle of a butterfly,” “life cycles,” “butterflies,” or “A life science webquest.” Only the first title identifies the content of the webquest; therefore, only the first title would be considered acceptable according to the ZUNAL rubric. This feature is especially important for educators searching for webquests on the Internet: without relevant titles, they may spend much longer than necessary finding what they need.

Like all research, this study has limitations. First, it is limited to 23 evaluators, each of whom evaluated three webquests of varying quality. Increasing the number of participants and webquests could improve the generalizability of the results. Second, all webquests were selected from a single source (www.zunal.com); including webquests from other sources that are not template based could also improve generalizability. Third, the rubric evaluates only the design of a webquest; it does not measure students’ learning processes or content learning from webquests.

This study provides a webquest design rubric with an initial analysis of its reliability. Future studies can replicate this study, and similar studies applying the ZUNAL rubric to webquests from other websites could provide more generalizable evidence of its reliability.

References

Abbit, J., & Ophus, J. (2008). What we know about the impacts of webquests: A review of research. AACE Journal, 16(4), 441-456.

Abu-Elwan, R. (2007). The use of webquest to enhance the mathematical problem-posing skills of pre-service teachers. International Journal for Technology in Mathematics Education, 14(1), 31-39.

Allan, J., & Street, M. (2007). The quest for deeper learning: An investigation into the impact of a knowledge pooling webquest in primary initial teacher training. British Journal of Educational Technology, 38(6), 1102-1112.

Arter, J., & McTighe, J. (2001). Scoring rubrics in the classroom. Thousand Oaks, CA: Corwin Press Inc.

Barrett, P. (2001, March). Assessing the reliability of rating data. Retrieved from the author’s personal website: http://www.pbarrett.net/presentations/rater.pdf

Barroso, M., & Clara, C. (2010). A webquest for adult learners: A report on a biology course. In J. Sanchez & K. Zhang (Eds.), Proceedings of the World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education 2010 (pp. 1566-1569). Chesapeake, VA: Association for the Advancement of Computers in Education.

Bartoshesky, A., & Kortecamp, K. (2003). WebQuest: An instructional tool that engages adult learners, promotes higher level thinking and deepens content knowledge. In C. Crawford et al. (Eds.), Proceedings of Society for Information Technology & Teacher Education International Conference 2003 (pp. 1951-1954). Chesapeake, VA: Association for the Advancement of Computers in Education.

Bellofatto, L., Bohl, N., Casey, M., Krill, M., & Dodge, B. (2001). A rubric for evaluating webquests. Retrieved from the San Diego State University Webquest website: http://webquest.sdsu.edu/webquestrubric.html

Black, P. (1998). Testing: Friend or foe? London, England: Falmer Press.

Bresciani, M. J., Zelna, C. L., & Anderson, J. A. (2004). Techniques for assessing student learning and development: A handbook for practitioners. Washington, DC: National Association of Student Personnel Administrators.

Busching, B. (1998). Grading inquiry projects. New Directions for Teaching and Learning, 74, 89-96.

Speth, C. A., Namuth, D. M., & Lee, D. J. (2007). Using the ASSIST short form for evaluating an information technology application: Validity and reliability issues. Informing Science Journal, 10(1), 107-119. Retrieved from http://www.inform.nu/Articles/Vol10/ISJv10p107-119Speth104.pdf

Colton, D. A., Gao, X., Harris, D. J., Kolen, M. J., Martinovich-Barhite, D., Wang, T., & Welch, C. J. (1997). Reliability issues with performance assessments: A collection of papers (ACT Research Report Series 97-3). Iowa City, IA: American College Testing Program.

Cortina, J. M. (1993). What is coefficient alpha? An examination of theory and applications. Journal of Applied Psychology, 78, 98-104.

Crocker, L., & Algina, J. (1986). Introduction to classical and modern test theory. Orlando, FL: Harcourt Brace Jovanovich.

Dodge, B. (1995). WebQuests: A technique for Internet-based learning. Distance Educator, 2, 10-13.

Dodge, B. (1997). Some thoughts about webquests. Retrieved from http://webquest.sdsu.edu/about_webquests.html

Dodge, B. (2001). FOCUS: Five rules for writing a great webquest. Learning and Leading with Technology, 28(8), 6-9, 58.

enhancing Missouri’s Instructional Networked Teaching Strategies. (2006). Rubric/scoring guide for webquests. Retrieved from www.emints.org/wp-content/uploads/2011/11/rubric.doc

Glass, G. V., & Hopkins, K. D. (1996). Statistical methods in education and psychology. Boston, MA: Allyn and Bacon.

Gorrow, T., Bing, J., & Royer, R. (2004, March). Going in circles: The effects of a webquest on the achievement and attitudes of prospective teacher candidates in education foundations. Paper presented at the Society for Information Technology and Teacher Education International Conference 2004, Atlanta, GA.

Hildebrand, G. (1996). Redefining achievement. In P. Murphy & C. Gipps (Eds.), Equity in the classroom: Towards effective pedagogy for girls and boys (pp. 149-172). London, England: Falmer.

Johnson, R. L., Penny, J., & Gordon, B. (2000). The relation between score resolution methods and interrater reliability: An empirical study of an analytic scoring rubric. Applied Measurement in Education, 13, 121-138.

Laborda, J. G. (2009). Using webquests for oral communication in English as a foreign language for tourism studies. Educational Technology & Society, 12(1), 258-270.

Lim, S., & Hernandez, P. (2007). The webquest: An illustration of instructional technology implementation in MFT training. Contemporary Family Therapy, 29, 163-175.

MacGregor, S. K., & Lou, Y. (2004/2005). Web-based learning: How task scaffolding and web site design support knowledge acquisition. Journal of Research on Technology in Education, 37(2), 161-175.

Maddux, C. D., & Cummings, R. (2007). WebQuests: Are they developmentally appropriate? The Educational Forum, 71(2), 117-127.

March, T. (2003). The learning power of webquests. Educational Leadership, 61(4), 42-47.

March, T. (2004). Criteria for assessing best webquests. Retrieved from the BestWebQuests University website: http://bestwebquests.com/bwq/matrix.asp

Morrison, G. R., & Ross, S. M. (1998). Evaluating technology-based processes and products. New Directions for Teaching and Learning, 74, 69-77.

Moskal, B. M. (2000). Scoring rubrics: What, when, and how? Practical Assessment, Research & Evaluation, 7(3). Retrieved from http://pareonline.net/getvn.asp?v=7&n=3

Moskal, B. M., & Leydens, J. A. (2000). Scoring rubric development: Validity and reliability. Practical Assessment, Research & Evaluation, 7(10). Retrieved from http://pareonline.net/getvn.asp?v=7&n=10

Perkins, R., & McKnight, M. L. (2005). Teachers’ attitudes toward webquests as a method of teaching. Computers in the Schools, 22(1/2), 123-133.

Perlman, C. C. (2003). Performance assessment: Designing appropriate performance tasks and scoring rubrics. In J. E. Wall & G. R. Walz (Eds.), Measuring up: Assessment issues for teachers, counselors, and administrators (pp. 497-506). Greensboro, NC: ERIC Counseling and Student Services Clearinghouse and National Board for Certified Counselors. Retrieved from ERIC database. (ED480070)

Peterson, C. L., & Koeck, D. C. (2001). When students create their own webquests. Learning and Leading with Technology, 29(1), 10-15.

Pohan, C., & Mathison, C. (1998). WebQuests: The potential of Internet-based instruction for global education. Social Studies Review, 37(2), 91-93.

Stemler, S. E. (2004). A comparison of consensus, consistency, and measurement approaches to estimating interrater reliability. Practical Assessment, Research & Evaluation, 9(4). Retrieved from http://pareonline.net/getvn.asp?v=9&n=4

Tsai, S. (2006, June). Students’ perceptions of English learning through EFL webquest. Paper presented at the World Conference on Educational Multimedia, Hypermedia and Telecommunications 2006, Orlando, FL.

Watson, K. L. (1999). WebQuests in the middle school. Meridian, 2(2). Retrieved from http://www.ncsu.edu/meridian/jul99/downloads/webquest.pdf

Wiggins, G. (1998). Educative assessment. San Francisco, CA: Jossey-Bass.

Zheng, R., Perez, J., Williamson, J., & Flygare, J. (2008). WebQuests as perceived by teachers: Implications for online teaching and learning. Journal of Computer Assisted Learning, 24(4), 295-304.

Zheng, R., Stucky, B., McAlack, M., Menchana, M., & Stoddart, S. (2005). WebQuest learning as perceived by higher education learners. TechTrends, 49, 41-49.

Author Note

Zafer Unal

University of South Florida St. Petersburg

Yasar Bodur

Georgia Southern University

Aslihan Unal

Usak University, Turkey

Appendix A

ZUNAL WebQuest Rubric: Changes in Version 1

- Row 4. Added Criteria 1d, “Keywords.”

- Row 6. Replaced “culminating project” with “the end/culminating project” in Criteria 3a.

- Row 8. Added Criteria 3d, “Cognitive Level of Learners.”

- Row 13. Added “googlemap” to the wording of Criteria 5b.

- Row 14. Added a sentence to the end of Criteria 5c.

- Row 18. Added Criteria 7b, “Further Study and Transformative Learning.”

- Row 23. Added Criteria 9c, “Consistent Look and Feel.”

- Row X. Removed the criterion named “Images”; it is now embedded in Criteria 9a, “Use of Graphics.”

- Row X. Removed the criterion named “Assessment”; it is now embedded in Criteria 6a as “evaluation.”

- Separated “Cognitive Level of Task” and “Cognitive Level of Learners” into two criteria.

ZUNAL WebQuest Rubric: Changes in Version 2

- Row 8. Added a descriptive phrase to Criteria 3d: “(age, social/culture…)”.

- Row 10. Replaced “and” with “and/or” in the first level of Criteria 4c.

- Row 13. Added “by topic, section, group or individual” to the wording of Criteria 5b.

- Row 13. Added “description or labels given for each source” to the wording of Criteria 5b.

- Row 15. Added “use of rubric or checklist, reflection of project, pre-post assessments, quiz etc.” to the wording of Criteria 6a.

- Row 19. Added “too many” to Criteria 8a.

- Row 22. Added “that distract from the meaning” to Criteria 9b.

- Row 24. Added “and Use of Tables” to Criteria 9d.

Appendix B

ZUNAL WebQuest Rubric (Final Version)

| # | Criteria | Unacceptable (1) | Acceptable (2) | Target (3) |
|---|---|---|---|---|
| Title Page | | | | |
| 1 | Criteria 1a. Title | No title is given for the webquest, or the selected title is completely irrelevant to the webquest. | A title is given for the webquest and is somewhat relevant to the topic. | A title is given for the webquest and is very relevant to the topic. |
| 2 | Criteria 1b. Description | No description is given for the webquest, or the description is completely irrelevant or very brief. | A webquest description is provided but does not give an adequate summary of the webquest. | The webquest description provides a detailed summary of the webquest. |
| 3 | Criteria 1c. Grade Level | No grade level range is assigned to this webquest, or the selected grade level is not appropriate for the webquest. | A grade level range is selected for the webquest and is somewhat appropriate for the webquest. | A grade level range is selected for the webquest and is very appropriate for the webquest. |
| 4 | Criteria 1d. Keywords | No keywords are provided for this webquest, or the selected keywords are irrelevant to the webquest. | Keywords are provided for this webquest, and the selected keywords are somewhat relevant to the webquest. | Keywords are provided for this webquest, and the selected keywords are very relevant to the webquest. |
| Introduction | | | | |
| 5 | Criteria 2a. Motivational Effectiveness of Introduction | The introduction is purely factual, with no appeal to learners’ interests and no compelling question or problem. | The introduction relates somewhat to the learners’ interests and/or describes a compelling question or problem. | The introduction draws the reader into the lesson by relating to the learners’ interests or goals and engagingly describes a compelling essential question or problem. |
| Task | | | | |
| 6 | Criteria 3a. Clarity of Task | After reading the task, it is still unclear what the end/culminating project of the webquest will be. | The written description of the task adequately describes the end/culminating project but does not engage the learner. | The written description of the end/culminating product clearly describes the goal of the webquest. |
| 7 | Criteria 3b. Cognitive Level of Task | The task does not require synthesis of multiple sources of information (transformative thinking). It is simply a collection of information or answers from the web. | The task requires synthesis of multiple sources of information (transformative thinking) but is limited in its significance and engagement. | The task requires synthesis of multiple sources of information (transformative thinking) and is highly creative, engaging, and goes beyond memorization. |
| 8 | Criteria 3d. Cognitive Level of Learners | The task is not realistic, not doable, and not appropriate to the developmental level and other individual differences (age, social/culture, and individual differences) of the students with whom the WebQuest will be used. | The task is realistic and doable but limited in its appropriateness to the developmental level and other individual differences (age, social/culture, and individual differences) of the students with whom the WebQuest will be used. | The task is realistic, doable, and appropriate to the developmental level and other individual differences (age, social/culture, and individual differences) of the students with whom the WebQuest will be used. |
| Process | | | | |
| 9 | Criteria 4a. Clarity of Process | The process page is not divided into sections or pages where each group/team or student would know exactly where they are in the process and what to do next. The process is not clearly organized. | The process page is divided into sections or pages where each group/team or student would know exactly where they are in the process and what to do next. The process is organized with specific directions that also allow choice/creativity. | The process page is divided into sections or pages where each group/team or student would know exactly where they are in the process and what to do next. Every step is clearly stated. |
| 10 | Criteria 4b. Scaffolding of Process | Activities are not related to each other and/or to the accomplishment of the task. | Some of the activities do not relate specifically to the accomplishment of the task. | Activities are clearly related and designed to progress from basic knowledge to higher level thinking. |
| 11 | Criteria 4c. Collaboration | The process provides only a few steps; no collaboration or separate roles are required. | Some separate tasks or roles are assigned. More complex activities are required. | Different roles are assigned to help students understand different perspectives and/or share responsibility in accomplishing the task. |
| Resources | | | | |
| 12 | Criteria 5a. Relevance and Quality of Resources | Resources (web links, files, etc.) are too limited, too many, and/or too irrelevant for students to accomplish the task. | Resources (web links, files, etc.) are sufficient, but some resources are not appropriate (they do not add anything new or are irrelevant). | There is a clear and meaningful connection between all the resources and the information students need to accomplish the task. Every resource carries its weight. |
| 13 | Criteria 5b. Quality of Resources | Resources (web links, files, etc.) do not lead to credible/trustworthy information. They do not encourage reflection through interactivity, multiple perspectives, multimedia, or current information such as googlemap, interactive databases, timelines, photo galleries, games/puzzles, etc. | Resources (web links, files, etc.) are credible but only provide facts. They do not encourage reflection through interactivity, multiple perspectives, multimedia, or current information such as googlemap, interactive databases, timelines, photo galleries, games/puzzles, etc. | Resources (web links, files, etc.) are credible and provide enough meaningful information for students to think deeply through interactivity, multiple perspectives, multimedia, and current information such as googlemap, interactive databases, timelines, photo galleries, games/puzzles, etc. |
| 14 | Criteria 5c. Organization of Resources | Resources are not organized or listed in a meaningful way (by topic, section, group or individual task). Instead, they are scattered throughout with no reference. Students would not know exactly which resources serve which purposes (no description or labels). | Resources are organized/listed in a meaningful way (by topic, section, group or individual task), but some students might still be confused about which resources serve which purposes (no description or labels given for each resource). | Resources are organized/listed in a meaningful way (by topic, section, group or individual task). Students would know exactly which resources serve which purposes (a description or label is given for each resource). |
| Evaluation | | | | |
| 15 | Criteria 6a. Clarity of Evaluation | Criteria for success are not described. Students have no idea how they or their work will be evaluated/judged. | Criteria for success are stated, but the webquest does not apply multiple assessment strategies (use of rubric or checklist, reflection on the project, pre-post assessments, quiz, etc.). | Criteria for success are clearly stated, and the webquest applies multiple assessment strategies (use of rubric or checklist, reflection on the project, pre-post assessments, quiz, etc.). |
| 16 | Criteria 6b. Relevancy of Evaluation | There is no connection between the evaluation process and the learning goals and standards to be accomplished by the end of the webquest. The evaluation instrument does not measure what students must know and be able to do to accomplish the task. | There is a limited connection between the evaluation process and the learning goals and standards to be accomplished by the end of the webquest. The evaluation instrument does not clearly measure what students must know and be able to do to accomplish the task. | There is a strong connection between the evaluation process and the learning goals and standards to be accomplished by the end of the webquest. The evaluation instrument clearly measures what students must know and be able to do to accomplish the task. |
| Conclusion | | | | |
| 17 | Criteria 7a. Summary | No conclusion is given to summarize what was learned at the end of the activity or lesson. | A conclusion is given but does not provide enough information about what was learned at the end of the activity or lesson. | A conclusion is given with detailed information about what was learned at the end of the activity or lesson. |
| 18 | Criteria 7b. Further Study and Transformative Learning | No further message, idea, question, or resources are given to encourage learners to extend their learning and transfer it to other topics. | Provides a message, idea, question, and/or additional resources to encourage learners to extend their learning, but it is not clear how the students’ new knowledge can transfer to other topics. | Provides a message, idea, question, and/or additional resources to encourage learners to extend their learning, and clearly relates how the students’ new knowledge can transfer to other topics. |
| Teacher Page | | | | |
| 19 | Criteria 8a. Standards | Common core curriculum standard(s) are not listed for the webquest, or the listed curriculum standard(s) are irrelevant or too many. | Common core curriculum standard(s) are listed in words, not only numbers, and are relevant, but the link(s) back to the standards website are missing. | Common core curriculum standard(s) are listed in words, not only numbers, are relevant, and the link(s) back to the standards website are given. |
| 20 | Criteria 8b. Credits | Credits/references are not given for any of the content used from external resources (graphics, clipart, backgrounds, music, videos, etc.). | Credits/references are not given for all of the content used from external resources (graphics, clipart, backgrounds, music, videos, etc.). | Credits/references are given for all of the content used from external resources (graphics, clipart, backgrounds, music, videos, etc.). |
| Overall Design | | | | |
| 21 | Criteria 9a. Use of Graphics | Inappropriate selection and use of graphic elements (irrelevant, distracting, and/or overused images). The graphics are not supportive of the webquest and do not give students information or perspectives not otherwise available. | Appropriate selection and use of graphic elements (relevant, not distracting, and/or not overused), but the graphics are not supportive of the webquest and do not give students information or perspectives not otherwise available. | Appropriate selection and use of graphic elements (relevant, not distracting, and/or not overused). The graphics are supportive of the webquest and give students information or perspectives not otherwise available. |
| 22 | Criteria 9b. Spelling and Grammar | There are serious spelling and/or grammar errors in this webquest that distract from the meaning and do not model appropriate language. | There are some minor spelling or grammar errors, but they are very limited and do not distract from the meaning. | The spelling and grammar have been checked carefully, and there are no errors. |
| 23 | Criteria 9c. Consistent Look and Feel | The webquest does not have a consistent look and feel (fonts, colors, etc.) and does not provide consistent working navigation from page to page. | The webquest has a somewhat consistent look and feel (fonts, colors, etc.) and provides somewhat consistent working navigation from page to page. | The webquest has a consistent look and feel (fonts, colors, etc.) and provides consistent working navigation from page to page. |
| 24 | Criteria 9d. Working Links and Use of Tables | There is a serious number of broken links, misplaced or missing images, badly sized tables, etc., that make the webquest ineffective to navigate. | There are some broken links, misplaced or missing images, or badly sized tables, but they do not make the webquest ineffective to navigate. | No mechanical problems noted. |
